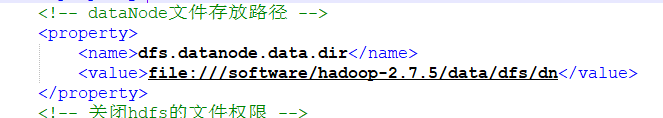

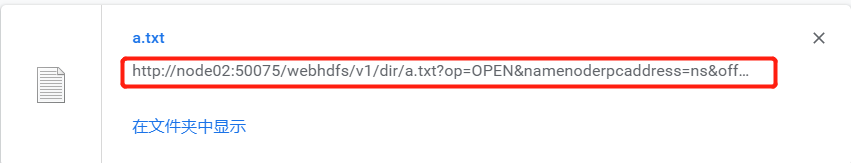

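Notes: set ONBOOT to yes and change BOOTPROTO to static, which switches from automatic assignment to a static IP. Then configure the static IP, gateway, netmask and DNS. Everything else is identical on the three VMs; IPADDR is assigned from 137 to 139 in order.

Problem: at first I set the gateway to 192.168.24.1. Neither rebooting the VM nor restarting the network service helped: I still could not ping 8.8.8.8 or Baidu.

Solution: open Edit > Virtual Network Editor and select your virtual network adapter. Check that its subnet address is in the same network segment as the IP you configured. Then click NAT Settings. There the subnet mask is 255.255.255.0 and the gateway IP is 192.168.24.2, not 192.168.24.1 as I had assumed. So I edited /etc/sysconfig/network-scripts/ifcfg-ens33 again, changed the gateway to 192.168.24.2, and restarted the network service.

Note: editing /etc/sysconfig/network with vim and setting HOSTNAME=node01 does not seem to work on CentOS 7.5. Instead, I used hostnamectl set-hostname node01, or edited /etc/hostname directly with vim. After the change, reboot with the reboot command for it to take effect.

Step 1: generate a key pair on each of the three machines. Run on all three: ssh-keygen -t rsa

Step 2: copy every public key to one machine. Copy the public key of each machine to the first machine by running on all three: ssh-copy-id node01

Step 3: copy the first machine's authorized keys to the other machines. The authorized_keys file on the first machine (192.168.24.137) now contains all three public keys; copy it to the other machines (192.168.24.138, 192.168.24.139) by running on the first machine:
scp /root/.ssh/authorized_keys node02:/root/.ssh
scp /root/.ssh/authorized_keys node03:/root/.ssh

Use the following commands on the three VMs to check that they can log in to each other without a password:

Note: if you get the following error when running yum install …, you can fix it with this command:

To switch the CentOS yum repositories to a mirror in China, use the following commands:
Aliyun mirror:
163 mirror:

Run the following two commands on the first machine (192.168.24.137):
PS: JDK 1.8 was already installed and configured on my node01; see: JDK installation.
Afterwards, you can see on node02 and node03 that the /software/jdk1.8.0_241/ directory was created automatically and node01's JDK was transferred to them. Then configure the JDK on node02 and node03 with the following commands.
Lines added to /etc/profile:

The download link is: ZooKeeper download page. I used ZooKeeper 3.4.9, which can be downloaded with wget.

On the first machine (node01), edit the configuration file with vim zoo.cfg (added lines).

On the first machine (node01), create a file named myid with the content 1 under /software/zookeeper-3.4.9/zkdatas/, using the command:

Distribute the installation package to the other machines by running the following two commands on the first machine (node01). On the second machine change the myid value to 2, and on the third machine to 3. (See figure.)

The cluster is fully distributed, with high availability for both the NameNode and the ResourceManager:

| Component | 192.168.24.137 | 192.168.24.138 | 192.168.24.139 |
|---|---|---|---|
| ZooKeeper | zk | zk | zk |
| HDFS | JournalNode | JournalNode | JournalNode |
| HDFS | NameNode | NameNode | |
| HDFS | ZKFC | ZKFC | |
| HDFS | DataNode | DataNode | DataNode |
| YARN | | ResourceManager | ResourceManager |
| YARN | NodeManager | NodeManager | NodeManager |
| MapReduce | | | JobHistoryServer |

Here I do not use the official Hadoop binary package but a package I compiled myself. Stop all services of the previous Hadoop cluster and delete the Hadoop installation on every machine.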
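Appendix: you can use the Notepad++ plugin NppFTP to edit files on the remote server. I looked for Show NppFTP Window among the small toolbar icons but could not find it, so I installed the plugin via Plugins > Plugins Admin > search for nppftp > tick it > Install. After reopening Notepad++ there is an extra icon; click Connect and you can edit files on the remote server. In this article, however, I do not use NppFTP; I edit the remote files with MobaXterm.

4.2.1 Modify core-site.xml
4.2.2 Modify hdfs-site.xml
4.2.3 Modify yarn-site.xml (note: node03 and node02 are configured differently)
4.2.4 Modify mapred-site.xml
4.2.5 Modify slaves
4.2.6 Modify hadoop-env.sh
4.2.7 Send the Hadoop installation from the first machine (node01) to the other machines
4.2.8 Create the directories (on all three VMs; see figure)
4.2.9 Change yarn-site.xml on node02 and node03
node01: comment out the yarn.resourcemanager.ha.id property
node02:
node03:

Run the following commands on node01:
If you hit the following problem when running these commands, passwordless SSH between the VMs is either not configured or configured incorrectly:
Run the following commands on node02:
Run the following commands on node02 and node03:
If you hit the error above during startup, fix it with the commands below.
Most blog posts online use the following commands (not recommended; they seem to cause problems). They print "This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh", so, since the scripts are deprecated, use these instead:

Note: check the three VMs with jps.
Problem: the result is wrong. DataNode is missing on all three VMs, so the DataNodes did not start (likely because the NameNode was reformatted while the DataNodes' data directories still hold the old cluster ID).
node01:
node02:
node03:
Solution:
(1) Stop the services with stop-dfs.sh and stop-yarn.sh (on any node).
(2) Delete the files in the DataNode data directory on all three VMs. According to the configuration, that is /software/hadoop-2.7.5/data/dfs/dn.
(3) Start the services again with start-dfs.sh and start-yarn.sh (on any node).
Check the three VMs with jps again (now the result is correct):
node01:
node02:
node03:

Run on node03:
Run on node02:
node03:
node01 (partial screenshot): open http://192.168.24.137:50070/dfshealth.html#tab-overview in a browser
node02 (partial screenshot): open http://192.168.24.138:50070/dfshealth.html#tab-overview in a browser
Open http://192.168.24.139:8088/cluster/nodes in a browser.
Delete a file:
Create a directory:
Upload a file:
Screenshot:
Note: clicking Download actually took me to http://node02:50075…. That page does not open unless the host name can be resolved; replacing node02 with its IP works. Inside the VMs I use node01, node02 and node03, which resolve directly, so I changed the hosts file on the host machine to match the VMs' hosts file. After that, clicking Download downloads the file directly in the browser.
That completes the distributed Hadoop setup.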

1. Linux environment preparation

1.1 Disable the firewall (run on all three VMs)

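firewall-cmd --state                   # check the firewall status
systemctl start firewalld.service      # start the firewall
systemctl stop firewalld.service       # stop the firewall
systemctl disable firewalld.service    # do not start the firewall at boot

1.2 Configure a static IP address (run on all three VMs)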

[root@node01 ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE="Ethernet"
PROXY_METHOD="none"
BROWSER_ONLY="no"
BOOTPROTO="static"
DEFROUTE="yes"
IPV4_FAILURE_FATAL="no"
IPV6INIT="yes"
IPV6_AUTOCONF="yes"
IPV6_DEFROUTE="yes"
IPV6_FAILURE_FATAL="no"
IPV6_ADDR_GEN_MODE="stable-privacy"
NAME="ens33"
UUID="a5a7540d-fafb-47c8-bd59-70f1f349462e"
DEVICE="ens33"
ONBOOT="yes"
IPADDR="192.168.24.137"
GATEWAY="192.168.24.2"
NETMASK="255.255.255.0"
DNS1="8.8.8.8"
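Part of the gateway mistake described at the top of this article can be caught before restarting the network. The following is a minimal bash sketch, not part of the original setup, that reads IPADDR, GATEWAY and NETMASK from an ifcfg file (these files use KEY="value" shell syntax, so they can be sourced) and checks that the gateway lies in the same subnet as the address. It cannot tell 192.168.24.1 from 192.168.24.2, since both are in the subnet; the real NAT gateway still has to be read from VMware's NAT Settings. The function names are illustrative.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers): subnet sanity check for an ifcfg file.

# Convert a dotted-quad IPv4 address (e.g. 192.168.24.2) to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo "$(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))"
}

# Succeed if address $1 and gateway $2 share a subnet under netmask $3.
same_subnet() {
  local ip gw mask
  ip=$(ip_to_int "$1"); gw=$(ip_to_int "$2"); mask=$(ip_to_int "$3")
  (( (ip & mask) == (gw & mask) ))
}

# Source the ifcfg file in a subshell so its variables do not leak,
# then compare IPADDR and GATEWAY under NETMASK.
check_ifcfg() {
  (
    # shellcheck disable=SC1090
    source "$1"
    if same_subnet "$IPADDR" "$GATEWAY" "$NETMASK"; then
      echo "OK: $GATEWAY is in the subnet of $IPADDR/$NETMASK"
    else
      echo "MISMATCH: $GATEWAY is not in the subnet of $IPADDR/$NETMASK"
      exit 1
    fi
  )
}
```

Usage: `check_ifcfg /etc/sysconfig/network-scripts/ifcfg-ens33` before `systemctl restart network`.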

[root@node01 yum.repos.d]# netstat -rn
Kernel IP routing table
Destination     Gateway         Genmask         Flags   MSS Window  irtt Iface
0.0.0.0         192.168.24.2    0.0.0.0         UG        0 0          0 ens33
169.254.0.0     0.0.0.0         255.255.0.0     U         0 0          0 ens33
192.168.24.0    0.0.0.0         255.255.255.0   U         0 0          0 ens33
[root@node01 yum.repos.d]# vim /etc/sysconfig/network-scripts/ifcfg-ens33
[root@node01 yum.repos.d]# systemctl restart network
[root@node01 yum.repos.d]# ping 8.8.8.8
PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data.
64 bytes from 8.8.8.8: icmp_seq=1 ttl=128 time=32.3 ms
64 bytes from 8.8.8.8: icmp_seq=2 ttl=128 time=32.9 ms
64 bytes from 8.8.8.8: icmp_seq=3 ttl=128 time=31.7 ms
64 bytes from 8.8.8.8: icmp_seq=4 ttl=128 time=31.7 ms
64 bytes from 8.8.8.8: icmp_seq=5 ttl=128 time=31.7 ms

1.3 Change the hostname (on all three VMs)

1.4 Map IP addresses to host names (on all three VMs; added lines)

192.168.24.137 node01 node01.hadoop.com
192.168.24.138 node02 node02.hadoop.com
192.168.24.139 node03 node03.hadoop.com

1.5 Passwordless login between the three machines (on all three VMs)

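Why passwordless login is needed
- A Hadoop cluster has many nodes, and the daemons on the worker nodes are usually started from the master node. A program on the master therefore has to log in to each worker automatically; without passwordless login a password would have to be typed every time, which is very tedious.
- How passwordless SSH login works:
  1. Node B must first hold node A's public key.
  2. Node A asks node B to log it in.
  3. Node B encrypts a piece of random text with node A's public key.
  4. Node A decrypts it with its private key and sends it back to node B.
  5. Node B checks that the text is correct.
  (This is a simplified model. In SSH protocol 2, node A signs data from the current session with its private key, and node B verifies the signature with the stored public key.)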

[root@node02 ~]# ssh-copy-id node01
/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
The authenticity of host 'node01 (192.168.24.137)' can't be established.
ECDSA key fingerprint is SHA256:GzI3JXtwr1thv7B0pdcvYQSpd98Nj1PkjHnvABgHFKI.
ECDSA key fingerprint is MD5:00:00:7b:46:99:5e:ff:f2:54:84:19:25:2c:63:0a:9e.
Are you sure you want to continue connecting (yes/no)? yes
/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
root@node01's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'node01'"
and check to make sure that only the key(s) you wanted were added.

[root@node01 ~]# scp /root/.ssh/authorized_keys node02:/root/.ssh
The authenticity of host 'node02 (192.168.24.138)' can't be established.
ECDSA key fingerprint is SHA256:GzI3JXtwr1thv7B0pdcvYQSpd98Nj1PkjHnvABgHFKI.
ECDSA key fingerprint is MD5:00:00:7b:46:99:5e:ff:f2:54:84:19:25:2c:63:0a:9e.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'node02,192.168.24.138' (ECDSA) to the list of known hosts.
root@node02's password:
authorized_keys                               100%  786   719.4KB/s   00:00
[root@node01 ~]# scp /root/.ssh/authorized_keys node03:/root/.ssh
The authenticity of host 'node03 (192.168.24.139)' can't be established.
ECDSA key fingerprint is SHA256:TyZdob+Hr1ZX7WRSeep1saPljafCrfto9UgRWNoN+20.
ECDSA key fingerprint is MD5:53:64:22:86:20:19:da:51:06:f9:a1:a9:a8:96:4f:af.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'node03,192.168.24.139' (ECDSA) to the list of known hosts.
root@node03's password:
authorized_keys                               100%  786   692.6KB/s   00:00
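Because start-dfs.sh, start-yarn.sh and hadoop-daemons.sh all log in to every node (including the local one), it can help to confirm non-interactively that no password is asked anywhere. A minimal sketch, assuming the node names from /etc/hosts above; `check_passwordless` is a hypothetical helper. `-o BatchMode=yes` makes ssh fail instead of prompting, so a host key that has not been accepted yet also shows up as a failure.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): check passwordless SSH to each given node.

NODES=(node01 node02 node03)   # host names from /etc/hosts (section 1.4)

check_passwordless() {
  local node failed=0
  for node in "$@"; do
    # BatchMode=yes: never prompt for a password or host-key confirmation.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$node" true 2>/dev/null; then
      echo "$node: ok"
    else
      echo "$node: password still required (or host unreachable)"
      failed=1
    fi
  done
  return "$failed"
}
```

Usage: run `check_passwordless "${NODES[@]}"` on each of the three machines; every line should end in `ok`.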

[root@node02 hadoop-2.7.5]# cd ~/.ssh
[root@node02 .ssh]# ssh node01
Last login: Thu Jun 11 10:12:27 2020 from 192.168.24.1
[root@node01 ~]# ssh node02
Last login: Thu Jun 11 14:51:58 2020 from node03

1.6 Time synchronization across the three machines (on all three VMs)

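Why time synchronization is needed
- Many distributed systems are stateful. For example, when a piece of data is stored, if node A records the time as 1 and node B records it as 2, things go wrong.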

## Install
[root@node03 ~]# yum install -y ntp
## Set up a scheduled job
[root@node03 ~]# crontab -e
no crontab for root - using an empty one
crontab: installing new crontab
## Add the following line to the file:
*/1 * * * * /usr/sbin/ntpdate ntp4.aliyun.com;
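`crontab -e` opens an editor, which is awkward to repeat on three machines. A minimal sketch of adding the same ntpdate line non-interactively, without duplicating it if the step is run twice; `add_cron_line` is a hypothetical helper that only transforms crontab text, and the cron line is copied from above.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): append a cron line unless it is already present.

NTP_LINE='*/1 * * * * /usr/sbin/ntpdate ntp4.aliyun.com;'

# Reads the current crontab on stdin, prints it with LINE appended
# only if an identical line is not already there.
add_cron_line() {
  local line=$1 existing
  existing=$(cat)
  if [ -n "$existing" ]; then
    printf '%s\n' "$existing"
  fi
  if ! grep -qxF -- "$line" <<< "$existing"; then
    printf '%s\n' "$line"
  fi
}
```

Usage: `crontab -l 2>/dev/null | add_cron_line "$NTP_LINE" | crontab -` (`crontab -l` prints nothing to stdout when root has no crontab yet, so the pipeline also works the first time).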

Existing lock /var/run/yum.pid: another copy is running as pid 5396.
Another app is currently holding the yum lock; waiting for it to exit...
The other application is: yum
Memory : 70 M RSS (514 MB VSZ)
Started: Thu Jun 11 10:02:10 2020 - 18:48 ago
State  : Traced/Stopped, pid: 5396
Another app is currently holding the yum lock; waiting for it to exit...
The other application is: yum
Memory : 70 M RSS (514 MB VSZ)
Started: Thu Jun 11 10:02:10 2020 - 18:50 ago
State  : Traced/Stopped, pid: 5396
^Z
[1]+  Stopped                 yum install -y ntp

[root@node03 ~]# rm -f /var/run/yum.pid
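Deleting /var/run/yum.pid unconditionally works, but in the output above the lock belongs to a yum process that still exists in the Stopped state, and the lock is there to keep two yum processes from changing the RPM database at the same time. A more cautious sketch (hypothetical helper; assumes it runs as root so `kill -0` can probe any PID) removes the lock only when the recorded PID no longer exists, and otherwise leaves it so the old process can be resumed with `fg` or ended with `kill`.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): remove a yum lock only if its PID is gone.

clear_stale_lock() {
  local pidfile=${1:-/var/run/yum.pid}
  local pid
  if [ ! -f "$pidfile" ]; then
    echo "no lock file"
    return 0
  fi
  pid=$(tr -dc '0-9' < "$pidfile")   # keep only the digits of the PID
  # kill -0 sends no signal; it only tests whether the process exists.
  if [ -n "$pid" ] && kill -0 "$pid" 2>/dev/null; then
    echo "PID $pid is still running; not removing $pidfile"
    return 1
  fi
  rm -f -- "$pidfile"
  echo "removed stale $pidfile"
}
```

Usage: `clear_stale_lock` (defaults to /var/run/yum.pid), then run `yum install -y ntp` again.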

# back up
cp /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup
# if your CentOS is 5
wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-5.repo
# if your CentOS is 6
wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-6.repo
yum clean all
yum makecache
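The Aliyun commands above only cover CentOS 5 and 6, while this cluster runs CentOS 7.5. A minimal sketch that derives the repo file name from the major version in /etc/redhat-release instead of hard-coding it. The `Centos-<major>.repo` pattern is taken from the two URLs above; that the same pattern is served for CentOS 7 is an assumption worth checking in a browser first, and `aliyun_repo_url` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): build the Aliyun repo URL from the release string.
# Assumption: the Centos-<major>.repo naming used for 5 and 6 also applies to 7.

aliyun_repo_url() {
  local release=${1:-$(cat /etc/redhat-release)}
  local major
  # "CentOS Linux release 7.5.1804 (Core)" -> "7"
  major=$(sed -E 's/.*release ([0-9]+).*/\1/' <<< "$release")
  case $major in
    ''|*[!0-9]*) echo "cannot parse: $release" >&2; return 1 ;;
  esac
  echo "https://mirrors.aliyun.com/repo/Centos-${major}.repo"
}
```

Usage: `wget -O /etc/yum.repos.d/CentOS-Base.repo "$(aliyun_repo_url)"`, followed by `yum clean all` and `yum makecache` as above.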

cp /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup
wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.163.com/.help/CentOS5-Base-163.repo
wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.163.com/.help/CentOS6-Base-163.repo
yum clean all
yum makecache

2. Install the JDK

2.1 Distribute the installation package to the other machines

[root@node01 software]# ls
hadoop-2.7.5  jdk1.8.0_241  zookeeper-3.4.9  zookeeper-3.4.9.tar.gz
[root@node01 software]# java -version
java version "1.8.0_241"
Java(TM) SE Runtime Environment (build 1.8.0_241-b07)
Java HotSpot(TM) 64-Bit Server VM (build 25.241-b07, mixed mode)
[root@node01 software]# scp -r /software/jdk1.8.0_241/ node02:/software/jdk1.8.0_241/
root@node02's password:
(output omitted)
[root@node01 software]# scp -r /software/jdk1.8.0_241/ node03:/software/jdk1.8.0_241/
root@node03's password:
(output omitted)

[root@node02 software]# vim /etc/profile
[root@node02 software]# source /etc/profile
[root@node02 software]# java -version
java version "1.8.0_241"
Java(TM) SE Runtime Environment (build 1.8.0_241-b07)
Java HotSpot(TM) 64-Bit Server VM (build 25.241-b07, mixed mode)

export JAVA_HOME=/software/jdk1.8.0_241
export CLASSPATH="$JAVA_HOME/lib"
export PATH="$JAVA_HOME/bin:$PATH"

3. Install the ZooKeeper cluster

| Server IP | Hostname | myid value |
|---|---|---|
| 192.168.24.137 | node01 | 1 |
| 192.168.24.138 | node02 | 2 |
| 192.168.24.139 | node03 | 3 |

3.1 Download the ZooKeeper archive

3.2 Extract the archive

[root@node01 software]# tar -zxvf zookeeper-3.4.9.tar.gz
[root@node01 software]# ls
hadoop-2.7.5  jdk1.8.0_241  zookeeper-3.4.9  zookeeper-3.4.9.tar.gz

3.3 Modify the configuration file

cd /software/zookeeper-3.4.9/conf/
cp zoo_sample.cfg zoo.cfg
mkdir -p /software/zookeeper-3.4.9/zkdatas/

dataDir=/software/zookeeper-3.4.9/zkdatas
# number of snapshots to keep
autopurge.snapRetainCount=3
# how often, in hours, old snapshots and logs are purged
autopurge.purgeInterval=1
# addresses of the servers in the ensemble
server.1=node01:2888:3888
server.2=node02:2888:3888
server.3=node03:2888:3888

3.4 Add the myid file

echo 1 > /software/zookeeper-3.4.9/zkdatas/myid

3.5 Distribute the installation package and change the myid values

[root@node01 conf]# scp -r /software/zookeeper-3.4.9/ node02:/software/zookeeper-3.4.9/
root@node02's password:
(output omitted)
[root@node01 conf]# scp -r /software/zookeeper-3.4.9/ node03:/software/zookeeper-3.4.9/
root@node03's password:
(output omitted)
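ZooKeeper requires each server's myid to equal the N of its own `server.N=host:2888:3888` line in zoo.cfg. Instead of typing the value on each machine, a minimal sketch can look it up by host name; `myid_for_host` is a hypothetical helper, and it assumes the server lines use dot-free host names such as node01 (an IP address in those lines would break the field split).

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): print the N of the server.N line for a host.
# Splitting "server.2=node02:2888:3888" on . = : gives
# $1=server, $2=2, $3=node02, $4=2888, $5=3888.

myid_for_host() {
  local cfg=$1 host=$2
  awk -F'[.=:]' -v h="$host" '
    $1 == "server" && $3 == h { print $2; found = 1 }
    END { exit !found }' "$cfg"
}
```

Usage on each node: `id=$(myid_for_host /software/zookeeper-3.4.9/conf/zoo.cfg "$(hostname)") && echo "$id" > /software/zookeeper-3.4.9/zkdatas/myid`. The `&&` keeps an existing myid untouched when the host is not listed.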

echo 2 > /software/zookeeper-3.4.9/zkdatas/myid

echo 3 > /software/zookeeper-3.4.9/zkdatas/myid

3.6 Start the ZooKeeper service on all three machines (run on all three VMs)

# start
/software/zookeeper-3.4.9/bin/zkServer.sh start
# check the status
/software/zookeeper-3.4.9/bin/zkServer.sh status
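`zkServer.sh status` prints a `Mode:` line on each node, and a healthy three-node ensemble has exactly one leader and two followers. A minimal sketch that checks this from the collected status output; `check_zk_modes` is a hypothetical helper, and how the outputs are gathered (for example over ssh) is left to the caller.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): read zkServer.sh status output on stdin and
# verify one leader plus (size - 1) followers. $1 = ensemble size, default 3.

check_zk_modes() {
  local input leaders followers
  input=$(cat)
  # grep -c prints 0 and exits 1 when nothing matches; "|| true" keeps the 0.
  leaders=$(grep -c '^Mode: leader' <<< "$input" || true)
  followers=$(grep -c '^Mode: follower' <<< "$input" || true)
  echo "leaders=$leaders followers=$followers"
  [ "$leaders" -eq 1 ] && [ "$followers" -eq $(( ${1:-3} - 1 )) ]
}
```

Usage from any node: `for n in node01 node02 node03; do ssh "$n" 'source /etc/profile; /software/zookeeper-3.4.9/bin/zkServer.sh status' 2>&1; done | check_zk_modes 3`. Sourcing /etc/profile matters because a non-interactive ssh session does not load the JAVA_HOME set in section 2.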

4. Install and configure Hadoop

4.1 Compile the Hadoop source on Linux CentOS 7.5

[root@localhost software]# cd /software/hadoop-2.7.5-src/hadoop-dist/target
[root@localhost target]# ls
antrun                    hadoop-2.7.5.tar.gz                 javadoc-bundle-options
classes                   hadoop-dist-2.7.5.jar               maven-archiver
dist-layout-stitching.sh  hadoop-dist-2.7.5-javadoc.jar       maven-shared-archive-resources
dist-tar-stitching.sh     hadoop-dist-2.7.5-sources.jar       test-classes
hadoop-2.7.5              hadoop-dist-2.7.5-test-sources.jar  test-dir
[root@localhost target]# cp -r hadoop-2.7.5 /software
[root@localhost target]# cd /software/
[root@localhost software]# ls
apache-maven-3.0.5             findbugs-1.3.9.tar.gz    jdk1.7.0_75                protobuf-2.5.0
apache-maven-3.0.5-bin.tar.gz  hadoop-2.7.5             jdk-7u75-linux-x64.tar.gz  protobuf-2.5.0.tar.gz
apache-tomcat-6.0.53.tar.gz    hadoop-2.7.5-src         mvnrepository              snappy-1.1.1
findbugs-1.3.9                 hadoop-2.7.5-src.tar.gz  mvnrepository.tar.gz       snappy-1.1.1.tar.gz
[root@localhost software]# cd hadoop-2.7.5
[root@localhost hadoop-2.7.5]# ls
bin  etc  include  lib  libexec  LICENSE.txt  NOTICE.txt  README.txt  sbin  share
[root@localhost hadoop-2.7.5]# cd etc
[root@localhost etc]# ls
hadoop
[root@localhost etc]# cd hadoop/
[root@localhost hadoop]# ls
capacity-scheduler.xml     hadoop-policy.xml        kms-log4j.properties        ssl-client.xml.example
configuration.xsl          hdfs-site.xml            kms-site.xml                ssl-server.xml.example
container-executor.cfg     httpfs-env.sh            log4j.properties            yarn-env.cmd
core-site.xml              httpfs-log4j.properties  mapred-env.cmd              yarn-env.sh
hadoop-env.cmd             httpfs-signature.secret  mapred-env.sh               yarn-site.xml
hadoop-env.sh              httpfs-site.xml          mapred-queues.xml.template
hadoop-metrics2.properties kms-acls.xml             mapred-site.xml.template
hadoop-metrics.properties  kms-env.sh               slaves

4.2 Modify the Hadoop configuration files

cd /software/hadoop-2.7.5/etc/hadoop

<configuration>
  <!-- ZooKeeper quorum used for NameNode high availability -->
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>node01:2181,node02:2181,node03:2181</value>
  </property>
  <!-- Default file system URI (the HA nameservice) -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://ns</value>
  </property>
  <!-- Directory for temporary files -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/software/hadoop-2.7.5/data/tmp</value>
  </property>
  <!-- Enable the HDFS trash; files in the trash are permanently deleted after seven days (value in minutes) -->
  <property>
    <name>fs.trash.interval</name>
    <value>10080</value>
  </property>
</configuration>

<configuration>
  <!-- Name of the nameservice -->
  <property>
    <name>dfs.nameservices</name>
    <value>ns</value>
  </property>
  <!-- The two machines acting as NameNodes in this nameservice -->
  <property>
    <name>dfs.ha.namenodes.ns</name>
    <value>nn1,nn2</value>
  </property>
  <!-- RPC address of the first NameNode -->
  <property>
    <name>dfs.namenode.rpc-address.ns.nn1</name>
    <value>node01:8020</value>
  </property>
  <!-- RPC address of the second NameNode -->
  <property>
    <name>dfs.namenode.rpc-address.ns.nn2</name>
    <value>node02:8020</value>
  </property>
  <!-- Service RPC address of nn1, used by DataNodes and other internal services -->
  <property>
    <name>dfs.namenode.servicerpc-address.ns.nn1</name>
    <value>node01:8022</value>
  </property>
  <!-- Service RPC address of nn2, used by DataNodes and other internal services -->
  <property>
    <name>dfs.namenode.servicerpc-address.ns.nn2</name>
    <value>node02:8022</value>
  </property>
  <!-- Web UI address of the first NameNode -->
  <property>
    <name>dfs.namenode.http-address.ns.nn1</name>
    <value>node01:50070</value>
  </property>
  <!-- Web UI address of the second NameNode -->
  <property>
    <name>dfs.namenode.http-address.ns.nn2</name>
    <value>node02:50070</value>
  </property>
  <!-- JournalNode addresses for the shared edit log; this must be configured -->
  <property>
    <name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://node01:8485;node02:8485;node03:8485/ns1</value>
  </property>
  <!-- Java class HDFS clients use to find the active NameNode and fail over -->
  <property>
    <name>dfs.client.failover.proxy.provider.ns</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <!-- Fencing method used during failover -->
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>sshfence</value>
  </property>
  <!-- SSH private key used by sshfence -->
  <property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>/root/.ssh/id_rsa</value>
  </property>
  <!-- Where the JournalNodes store their data -->
  <property>
    <name>dfs.journalnode.edits.dir</name>
    <value>/software/hadoop-2.7.5/data/dfs/jn</value>
  </property>
  <!-- Enable automatic failover -->
  <property>
    <name>dfs.ha.automatic-failover.enabled</name>
    <value>true</value>
  </property>
  <!-- Where the NameNode stores its namespace image (fsimage) -->
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:///software/hadoop-2.7.5/data/dfs/nn/name</value>
  </property>
  <!-- Where the NameNode stores its edit log -->
  <property>
    <name>dfs.namenode.edits.dir</name>
    <value>file:///software/hadoop-2.7.5/data/dfs/nn/edits</value>
  </property>
  <!-- Where the DataNodes store block data -->
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:///software/hadoop-2.7.5/data/dfs/dn</value>
  </property>
  <!-- Disable HDFS permission checking -->
  <property>
    <name>dfs.permissions</name>
    <value>false</value>
  </property>
  <!-- Block size in bytes (134217728 = 128 MB) -->
  <property>
    <name>dfs.blocksize</name>
    <value>134217728</value>
  </property>
</configuration>
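The arithmetic behind `dfs.blocksize` above: 134217728 bytes is 128 × 1024 × 1024, i.e. 128 MB, and a file occupies ceil(size / blocksize) blocks, with the last block storing only what remains. A minimal sketch of that calculation; `blocks_for_size` is a hypothetical helper and does not account for replication.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): number of HDFS blocks a file of SIZE bytes needs.

BLOCKSIZE=134217728   # dfs.blocksize from hdfs-site.xml, = 128 * 1024 * 1024

blocks_for_size() {
  local size=$1 bs=${2:-$BLOCKSIZE}
  # Integer ceiling division: (size + bs - 1) / bs
  echo $(( (size + bs - 1) / bs ))
}
```

For example, `blocks_for_size 300000000` prints 3: two full 128 MB blocks plus a partial third block.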

<configuration>
  <!-- Site specific YARN configuration properties -->
  <!-- Log aggregation: after an application finishes, the logs of each container are collected and moved to a file system such as HDFS. -->
  <!-- The destination is set with "yarn.nodemanager.remote-app-log-dir" and "yarn.nodemanager.remote-app-log-dir-suffix". -->
  <!-- Users can then access the logs through the application timeline server. -->
  <!-- Enable log aggregation so that the logs from all nodes are gathered in one place after an application finishes -->
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
  <!-- Enable ResourceManager HA (default: false) -->
  <property>
    <name>yarn.resourcemanager.ha.enabled</name>
    <value>true</value>
  </property>
  <!-- Cluster ID; ensures that an RM does not become active for another cluster -->
  <property>
    <name>yarn.resourcemanager.cluster-id</name>
    <value>mycluster</value>
  </property>
  <!-- Logical IDs of the ResourceManagers -->
  <property>
    <name>yarn.resourcemanager.ha.rm-ids</name>
    <value>rm1,rm2</value>
  </property>
  <!-- Host of the first ResourceManager -->
  <property>
    <name>yarn.resourcemanager.hostname.rm1</name>
    <value>node03</value>
  </property>
  <!-- Host of the second ResourceManager -->
  <property>
    <name>yarn.resourcemanager.hostname.rm2</name>
    <value>node02</value>
  </property>
  <!-- Addresses of the first ResourceManager -->
  <property>
    <name>yarn.resourcemanager.address.rm1</name>
    <value>node03:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address.rm1</name>
    <value>node03:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address.rm1</name>
    <value>node03:8031</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address.rm1</name>
    <value>node03:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address.rm1</name>
    <value>node03:8088</value>
  </property>
  <!-- Addresses of the second ResourceManager -->
  <property>
    <name>yarn.resourcemanager.address.rm2</name>
    <value>node02:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address.rm2</name>
    <value>node02:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address.rm2</name>
    <value>node02:8031</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address.rm2</name>
    <value>node02:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address.rm2</name>
    <value>node02:8088</value>
  </property>
  <!-- Enable ResourceManager state recovery -->
  <property>
    <name>yarn.resourcemanager.recovery.enabled</name>
    <value>true</value>
  </property>
  <!-- Set rm1 on node03 and rm2 on node02. Note: configured files are usually copied to the other machines as-is, but this value must be changed on the other YARN ResourceManager machine, and machines without a ResourceManager should not set it. -->
  <property>
    <name>yarn.resourcemanager.ha.id</name>
    <value>rm1</value>
    <description>If we want to launch more than one RM in single node, we need this configuration</description>
  </property>
  <!-- Class used to persist ResourceManager state -->
  <property>
    <name>yarn.resourcemanager.store.class</name>
    <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
  </property>
  <property>
    <name>yarn.resourcemanager.zk-address</name>
    <value>node02:2181,node03:2181,node01:2181</value>
    <description>For multiple zk services, separate them with comma</description>
  </property>
  <!-- Enable automatic ResourceManager failover -->
  <property>
    <name>yarn.resourcemanager.ha.automatic-failover.enabled</name>
    <value>true</value>
    <description>Enable automatic failover; By default, it is enabled only when HA is enabled.</description>
  </property>
  <property>
    <name>yarn.client.failover-proxy-provider</name>
    <value>org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider</value>
  </property>
  <!-- Number of vcores this NodeManager can allocate to containers (default: 8) -->
  <property>
    <name>yarn.nodemanager.resource.cpu-vcores</name>
    <value>4</value>
  </property>
  <!-- Memory available to containers on each node, in MB -->
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>512</value>
  </property>
  <!-- Minimum memory a single container request can get (default: 1024 MB) -->
  <property>
    <name>yarn.scheduler.minimum-allocation-mb</name>
    <value>512</value>
  </property>
  <!-- Maximum memory a single container request can get (default: 8192 MB) -->
  <property>
    <name>yarn.scheduler.maximum-allocation-mb</name>
    <value>512</value>
  </property>
  <!-- How long aggregated logs are kept before they are deleted -->
  <property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>2592000</value> <!-- 30 days -->
  </property>
  <!-- Time in seconds to keep user logs on the node; only applies when log aggregation is disabled -->
  <property>
    <name>yarn.nodemanager.log.retain-seconds</name>
    <value>604800</value> <!-- 7 days -->
  </property>
  <!-- Compression type used for aggregated logs -->
  <property>
    <name>yarn.nodemanager.log-aggregation.compression-type</name>
    <value>gz</value>
  </property>
  <!-- Local directory for NodeManager files -->
  <property>
    <name>yarn.nodemanager.local-dirs</name>
    <value>/software/hadoop-2.7.5/yarn/local</value>
  </property>
  <!-- Maximum number of completed applications the ResourceManager keeps -->
  <property>
    <name>yarn.resourcemanager.max-completed-applications</name>
    <value>1000</value>
  </property>
  <!-- Comma-separated list of auxiliary services; names may contain only a-zA-Z0-9_ and must not start with a digit -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <!-- Interval, in ms, between attempts to reconnect to a lost ResourceManager -->
  <property>
    <name>yarn.resourcemanager.connect.retry-interval.ms</name>
    <value>2000</value>
  </property>
</configuration>
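Because `yarn.resourcemanager.ha.id` must differ per host (rm1 on node03, rm2 on node02, unset on node01), the right value can be derived from the `yarn.resourcemanager.hostname.rmN` entries above rather than remembered. A minimal sketch; `rm_id_for_host` is a hypothetical helper, and matching XML with grep and sed is only reliable for simple files like this one, where each name is directly followed by its value.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): print the rm id whose hostname entry matches HOST.
# Fails (non-zero) when the host runs no ResourceManager, e.g. node01.

rm_id_for_host() {
  local xml=$1 host=$2
  tr -d '\n' < "$xml" |
    grep -o '<name>yarn\.resourcemanager\.hostname\.rm[0-9]*</name>[[:space:]]*<value>[^<]*</value>' |
    sed -E 's#<name>yarn\.resourcemanager\.hostname\.(rm[0-9]+)</name>[[:space:]]*<value>([^<]*)</value>#\1 \2#' |
    awk -v h="$host" '$2 == h { print $1; found = 1 } END { exit !found }'
}
```

Usage: `rm_id_for_host /software/hadoop-2.7.5/etc/hadoop/yarn-site.xml "$(hostname)"` prints `rm1` on node03 and `rm2` on node02.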

<configuration>
  <!-- Run MapReduce on YARN -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <!-- MapReduce JobHistory Server IPC host:port -->
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>node03:10020</value>
  </property>
  <!-- MapReduce JobHistory Server Web UI host:port -->
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>node03:19888</value>
  </property>
  <!-- The directory where MapReduce stores control files. Default: ${hadoop.tmp.dir}/mapred/system -->
  <property>
    <name>mapreduce.jobtracker.system.dir</name>
    <value>/software/hadoop-2.7.5/data/system/jobtracker</value>
  </property>
  <!-- The amount of memory to request from the scheduler for each map task. Default: 1024 -->
  <property>
    <name>mapreduce.map.memory.mb</name>
    <value>1024</value>
  </property>
  <!--
  <property>
    <name>mapreduce.map.java.opts</name>
    <value>-Xmx1024m</value>
  </property>
  -->
  <!-- The amount of memory to request from the scheduler for each reduce task. Default: 1024 -->
  <property>
    <name>mapreduce.reduce.memory.mb</name>
    <value>1024</value>
  </property>
  <!--
  <property>
    <name>mapreduce.reduce.java.opts</name>
    <value>-Xmx2048m</value>
  </property>
  -->
  <!-- Total buffer memory to use while sorting files, in MB. By default each merge stream gets 1 MB, which should minimize seeks. Default: 100 -->
  <property>
    <name>mapreduce.task.io.sort.mb</name>
    <value>100</value>
  </property>
  <!--
  <property>
    <name>mapreduce.jobtracker.handler.count</name>
    <value>25</value>
  </property>
  -->
  <!-- Number of streams merged at once while sorting files; this determines the number of open file handles. Default: 10 -->
  <property>
    <name>mapreduce.task.io.sort.factor</name>
    <value>10</value>
  </property>
  <!-- Number of parallel transfers run by reduce during the copy (shuffle) phase. Default: 5 -->
  <property>
    <name>mapreduce.reduce.shuffle.parallelcopies</name>
    <value>25</value>
  </property>
  <property>
    <name>yarn.app.mapreduce.am.command-opts</name>
    <value>-Xmx1024m</value>
  </property>
  <!-- Total memory required by the MR AppMaster. Default: 1536 -->
  <property>
    <name>yarn.app.mapreduce.am.resource.mb</name>
    <value>1536</value>
  </property>
  <!-- Local directory where MapReduce stores intermediate data files; directories that do not exist are ignored. Default: ${hadoop.tmp.dir}/mapred/local -->
  <property>
    <name>mapreduce.cluster.local.dir</name>
    <value>/software/hadoop-2.7.5/data/system/local</value>
  </property>
</configuration>
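The memory values in mapred-site.xml and yarn-site.xml have to fit together: YARN rejects a container request larger than `yarn.scheduler.maximum-allocation-mb`, and a container must also fit into `yarn.nodemanager.resource.memory-mb` on some node. With the values above (512 MB limits against 1024 MB map/reduce and 1536 MB AppMaster requests), MapReduce jobs would likely be refused, so either the YARN limits need raising or the MapReduce sizes lowering. A minimal sketch of the comparison, with the numbers copied from the two files as plain variables (hypothetical helpers; no XML is parsed).

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers): compare MapReduce container requests with
# the YARN limits. Values copied from yarn-site.xml and mapred-site.xml above.

NM_MEMORY_MB=512   # yarn.nodemanager.resource.memory-mb
MAX_ALLOC_MB=512   # yarn.scheduler.maximum-allocation-mb

check_request() {
  local name=$1 mb=$2
  if (( mb > MAX_ALLOC_MB || mb > NM_MEMORY_MB )); then
    echo "$name=$mb MB exceeds the YARN limit (max allocation $MAX_ALLOC_MB MB, NodeManager $NM_MEMORY_MB MB)"
    return 1
  fi
  echo "$name=$mb MB fits"
}

check_all_requests() {
  local rc=0
  check_request mapreduce.map.memory.mb 1024 || rc=1
  check_request mapreduce.reduce.memory.mb 1024 || rc=1
  check_request yarn.app.mapreduce.am.resource.mb 1536 || rc=1
  return "$rc"
}
```

Running `check_all_requests` with the configuration as written reports all three requests as too large.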

node01
node02
node03

export JAVA_HOME=/software/jdk1.8.0_241

[root@node01 software]# ls
hadoop-2.7.5  jdk1.8.0_241  zookeeper-3.4.9  zookeeper-3.4.9.tar.gz
[root@node01 software]# scp -r hadoop-2.7.5/ node02:$PWD
root@node02's password:
(output omitted)
[root@node01 software]# scp -r hadoop-2.7.5/ node03:$PWD
root@node03's password:
(output omitted)

mkdir -p /software/hadoop-2.7.5/data/dfs/nn/name
mkdir -p /software/hadoop-2.7.5/data/dfs/nn/edits

<!--
<property>
  <name>yarn.resourcemanager.ha.id</name>
  <value>rm1</value>
  <description>If we want to launch more than one RM in single node, we need this configuration</description>
</property>
-->

<property>
  <name>yarn.resourcemanager.ha.id</name>
  <value>rm2</value>
  <description>If we want to launch more than one RM in single node, we need this configuration</description>
</property>

<property>
  <name>yarn.resourcemanager.ha.id</name>
  <value>rm1</value>
  <description>If we want to launch more than one RM in single node, we need this configuration</description>
</property>

5. Start Hadoop

5.1 HDFS startup procedure

bin/hdfs zkfc -formatZK
sbin/hadoop-daemons.sh start journalnode
bin/hdfs namenode -format
bin/hdfs namenode -initializeSharedEdits -force
sbin/start-dfs.sh
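The five commands above are a one-time initialization run from /software/hadoop-2.7.5 on node01, and a failure halfway through (such as the SSH errors that follow) is easy to miss. A minimal sketch that runs them in order and stops at the first failure, with a dry-run mode that only prints them; `run_steps` and `DRY_RUN` are hypothetical names. `bin/hdfs namenode -format` wipes the NameNode metadata, so this must never be rerun on a cluster that holds data, and the standby steps on node02 are not included.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): run the one-time HDFS HA init steps fail-fast.
# DRY_RUN=1 only prints the commands.

HDFS_INIT_STEPS=(
  "bin/hdfs zkfc -formatZK"
  "sbin/hadoop-daemons.sh start journalnode"
  "bin/hdfs namenode -format"
  "bin/hdfs namenode -initializeSharedEdits -force"
  "sbin/start-dfs.sh"
)

run_steps() {
  local step
  for step in "$@"; do
    echo "+ $step"
    if [ "${DRY_RUN:-0}" != 1 ]; then
      # Unquoted on purpose: each step is a simple command line to word-split.
      $step || { echo "failed: $step" >&2; return 1; }
    fi
  done
}
```

Usage: `cd /software/hadoop-2.7.5 && DRY_RUN=1 run_steps "${HDFS_INIT_STEPS[@]}"` to review, then the same without `DRY_RUN=1`.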

[root@node01 hadoop-2.7.5]# sbin/hadoop-daemons.sh start journalnode
The authenticity of host 'node01 (192.168.24.137)' can't be established.
ECDSA key fingerprint is SHA256:GzI3JXtwr1thv7B0pdcvYQSpd98Nj1PkjHnvABgHFKI.
ECDSA key fingerprint is MD5:00:00:7b:46:99:5e:ff:f2:54:84:19:25:2c:63:0a:9e.
Are you sure you want to continue connecting (yes/no)? root@node02's password: root@node03's password: Please type 'yes' or 'no':
node01: Warning: Permanently added 'node01' (ECDSA) to the list of known hosts.
root@node01's password: node02: starting journalnode, logging to /software/hadoop-2.7.5/logs/hadoop-root-journalnode-node02.out
root@node03's password: node03: Permission denied, please try again.
root@node01's password: node01: Permission denied, please try again.

[root@node02 software]# cd hadoop-2.7.5/
[root@node02 hadoop-2.7.5]# bin/hdfs namenode -bootstrapStandby
(output omitted)
[root@node02 hadoop-2.7.5]# sbin/hadoop-daemon.sh start namenode
(output omitted)

5.2 YARN startup procedure

[root@node03 software]# cd hadoop-2.7.5/
[root@node03 hadoop-2.7.5]# sbin/start-yarn.sh

[root@node02 hadoop-2.7.5]# sbin/start-yarn.sh
starting yarn daemons
resourcemanager running as process 11740. Stop it first.
The authenticity of host 'node02 (192.168.24.138)' can't be established.
ECDSA key fingerprint is SHA256:GzI3JXtwr1thv7B0pdcvYQSpd98Nj1PkjHnvABgHFKI.
ECDSA key fingerprint is MD5:00:00:7b:46:99:5e:ff:f2:54:84:19:25:2c:63:0a:9e.
Are you sure you want to continue connecting (yes/no)? node01: nodemanager running as process 15655. Stop it first.
node03: nodemanager running as process 13357. Stop it first.

# The processes are already running: run stop-all.sh first, then start-all.sh
[root@node02 sbin]# pwd
/software/hadoop-2.7.5/sbin
[root@node02 sbin]# ./stop-all.sh
[root@node02 sbin]# ./start-all.sh

./stop-yarn.sh
./stop-dfs.sh
./start-yarn.sh
./start-dfs.sh

[root@node03 sbin]# ./start-dfs.sh
Starting namenodes on [node01 node02]
node02: starting namenode, logging to /software/hadoop-2.7.5/logs/hadoop-root-namenode-node02.out
node01: starting namenode, logging to /software/hadoop-2.7.5/logs/hadoop-root-namenode-node01.out
node02: starting datanode, logging to /software/hadoop-2.7.5/logs/hadoop-root-datanode-node02.out
node01: starting datanode, logging to /software/hadoop-2.7.5/logs/hadoop-root-datanode-node01.out
node03: starting datanode, logging to /software/hadoop-2.7.5/logs/hadoop-root-datanode-node03.out
Starting journal nodes [node01 node02 node03]
node02: starting journalnode, logging to /software/hadoop-2.7.5/logs/hadoop-root-journalnode-node02.out
node01: starting journalnode, logging to /software/hadoop-2.7.5/logs/hadoop-root-journalnode-node01.out
node03: starting journalnode, logging to /software/hadoop-2.7.5/logs/hadoop-root-journalnode-node03.out
Starting ZK Failover Controllers on NN hosts [node01 node02]
node01: starting zkfc, logging to /software/hadoop-2.7.5/logs/hadoop-root-zkfc-node01.out
node02: starting zkfc, logging to /software/hadoop-2.7.5/logs/hadoop-root-zkfc-node02.out
[root@node03 sbin]# ./start-yarn.sh
starting yarn daemons
starting resourcemanager, logging to /software/hadoop-2.7.5/logs/yarn-root-resourcemanager-node03.out
node01: starting nodemanager, logging to /software/hadoop-2.7.5/logs/yarn-root-nodemanager-node01.out
node02: starting nodemanager, logging to /software/hadoop-2.7.5/logs/yarn-root-nodemanager-node02.out
node03: starting nodemanager, logging to /software/hadoop-2.7.5/logs/yarn-root-nodemanager-node03.out

[root@node01 hadoop-2.7.5]# jps
8083 NodeManager
8531 DFSZKFailoverController
8404 JournalNode
9432 Jps
1467 QuorumPeerMain
8235 NameNode

[root@node02 sbin]# jps
7024 NodeManager
7472 DFSZKFailoverController
7345 JournalNode
7176 NameNode
8216 ResourceManager
8793 Jps
1468 QuorumPeerMain

[root@node03 hadoop-2.7.5]# jps
5349 NodeManager
5238 ResourceManager
6487 JobHistoryServer
6647 Jps
5997 JournalNode
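Reading three jps listings by eye is how a missing daemon slips through; in the first listings above, DataNode is absent on every node. A minimal sketch that compares jps output with the daemons each node should run according to the deployment table at the top of this article; `EXPECTED` and `missing_daemons` are hypothetical names, and the expected lists are my reading of that table.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): report expected daemons missing from jps output.
# Expected daemons per host, taken from the deployment table (assumption).

declare -A EXPECTED=(
  [node01]="QuorumPeerMain NameNode DFSZKFailoverController JournalNode DataNode NodeManager"
  [node02]="QuorumPeerMain NameNode DFSZKFailoverController JournalNode DataNode ResourceManager NodeManager"
  [node03]="QuorumPeerMain JournalNode DataNode ResourceManager NodeManager JobHistoryServer"
)

# Reads jps output ("<pid> <MainClass>" lines) on stdin, prints missing names,
# and succeeds only if nothing is missing.
missing_daemons() {
  local host=$1 jps_out daemon
  local -a missing=()
  if [ -z "${EXPECTED[$host]:-}" ]; then
    echo "unknown host: $host" >&2
    return 2
  fi
  jps_out=$(cat)
  for daemon in ${EXPECTED[$host]}; do
    # -w: match the class name as a whole word, so NameNode never matches DataNode.
    if ! grep -qw -- "$daemon" <<< "$jps_out"; then
      missing+=("$daemon")
    fi
  done
  echo "${missing[*]}"
  [ "${#missing[@]}" -eq 0 ]
}
```

Usage: `ssh node01 'source /etc/profile; jps' | missing_daemons node01`; an empty line and exit status 0 mean every expected daemon is up.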

[root@node03 hadoop-2.7.5]# ./sbin/stop-dfs.sh
Stopping namenodes on [node01 node02]
node02: no namenode to stop
node01: no namenode to stop
node02: no datanode to stop
node01: no datanode to stop
node03: no datanode to stop
Stopping journal nodes [node01 node02 node03]
node02: no journalnode to stop
node01: no journalnode to stop
node03: no journalnode to stop
Stopping ZK Failover Controllers on NN hosts [node01 node02]
node02: no zkfc to stop
node01: no zkfc to stop
[root@node03 hadoop-2.7.5]# ./sbin/stop-yarn.sh
stopping yarn daemons
stopping resourcemanager
node01: stopping nodemanager
node02: stopping nodemanager
node03: stopping nodemanager
no proxyserver to stop

[root@node01 hadoop-2.7.5]# rm -rf data/dfs/dn
[root@node01 hadoop-2.7.5]# sbin/start-dfs.sh
Starting namenodes on [node01 node02]
node02: starting namenode, logging to /software/hadoop-2.7.5/logs/hadoop-root-namenode-node02.out
node01: starting namenode, logging to /software/hadoop-2.7.5/logs/hadoop-root-namenode-node01.out
node02: starting datanode, logging to /software/hadoop-2.7.5/logs/hadoop-root-datanode-node02.out
node03: starting datanode, logging to /software/hadoop-2.7.5/logs/hadoop-root-datanode-node03.out
node01: starting datanode, logging to /software/hadoop-2.7.5/logs/hadoop-root-datanode-node01.out
Starting journal nodes [node01 node02 node03]
node02: starting journalnode, logging to /software/hadoop-2.7.5/logs/hadoop-root-journalnode-node02.out
node03: starting journalnode, logging to /software/hadoop-2.7.5/logs/hadoop-root-journalnode-node03.out
node01: starting journalnode, logging to /software/hadoop-2.7.5/logs/hadoop-root-journalnode-node01.out
Starting ZK Failover Controllers on NN hosts [node01 node02]
node02: starting zkfc, logging to /software/hadoop-2.7.5/logs/hadoop-root-zkfc-node02.out
node01: starting zkfc, logging to /software/hadoop-2.7.5/logs/hadoop-root-zkfc-node01.out
You have new mail in /var/spool/mail/root
[root@node01 hadoop-2.7.5]# sbin/start-yarn.sh
starting yarn daemons
starting resourcemanager, logging to /software/hadoop-2.7.5/logs/yarn-root-resourcemanager-node01.out
node02: starting nodemanager, logging to /software/hadoop-2.7.5/logs/yarn-root-nodemanager-node02.out
node03: starting nodemanager, logging to /software/hadoop-2.7.5/logs/yarn-root-nodemanager-node03.out
node01: starting nodemanager, logging to /software/hadoop-2.7.5/logs/yarn-root-nodemanager-node01.out

[root@node01 dfs]# jps
10561 NodeManager
9955 DataNode
10147 JournalNode
9849 NameNode
10762 Jps
1467 QuorumPeerMain
10319 DFSZKFailoverController

[root@node02 hadoop-2.7.5]# jps
9744 NodeManager
9618 DFSZKFailoverController
9988 Jps
9367 NameNode
8216 ResourceManager
9514 JournalNode
1468 QuorumPeerMain
9439 DataNode

[root@node03 hadoop-2.7.5]# jps
7953 Jps
7683 JournalNode
6487 JobHistoryServer
7591 DataNode
7784 NodeManager

5.3 Check the ResourceManager state

[root@node03 hadoop-2.7.5]# bin/yarn rmadmin -getServiceState rm1
active

[root@node02 hadoop-2.7.5]# bin/yarn rmadmin -getServiceState rm2
standby

5.4 Start the JobHistory server

[root@node03 hadoop-2.7.5]# sbin/mr-jobhistory-daemon.sh start historyserver
starting historyserver, logging to /software/hadoop-2.7.5/logs/mapred-root-historyserver-node03.out

5.5 Check the HDFS status

5.6 Access the YARN cluster web UI

5.7 Job history web UI

6. Hadoop command line

[root@node01 bin]# ./hdfs dfs -rm /a.txt
20/06/12 14:33:30 INFO fs.TrashPolicyDefault: Namenode trash configuration: Deletion interval = 10080 minutes, Emptier interval = 0 minutes.
20/06/12 14:33:30 INFO fs.TrashPolicyDefault: Moved: 'hdfs://ns/a.txt' to trash at: hdfs://ns/user/root/.Trash/Current/a.txt
Moved: 'hdfs://ns/a.txt' to trash at: hdfs://ns/user/root/.Trash/Current
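Because fs.trash.interval is set in core-site.xml, `hdfs dfs -rm` does not delete immediately: as the log above shows, /a.txt removed by root is moved under /user/root/.Trash/Current, keeping its original path, and it becomes eligible for permanent deletion after 10080 minutes (7 days). A minimal sketch of that path rule and conversion, inferred from this log; the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers): where a deleted HDFS file lands in the trash,
# following the path pattern in the log above, and the trash interval in days.

TRASH_INTERVAL_MIN=10080   # fs.trash.interval from core-site.xml, in minutes

trash_path() {
  local user=$1 path=$2   # path is absolute, e.g. /a.txt
  echo "/user/${user}/.Trash/Current${path}"
}

trash_interval_days() {
  echo $(( TRASH_INTERVAL_MIN / 60 / 24 ))
}
```

To restore the file: `./hdfs dfs -mv "$(trash_path root /a.txt)" /a.txt`. To bypass the trash when deleting: `./hdfs dfs -rm -skipTrash /a.txt`.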

[root@node01 bin]# ./hdfs dfs -mkdir /dir

[root@node01 bin]# ./hdfs dfs -put /software/a.txt /dir
