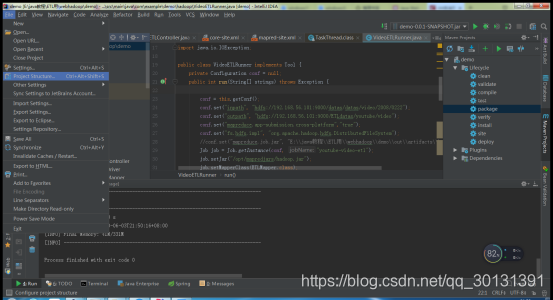

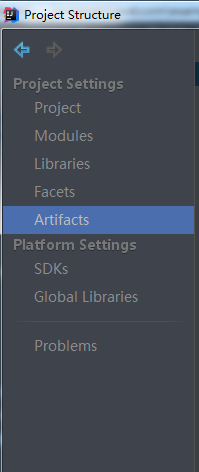

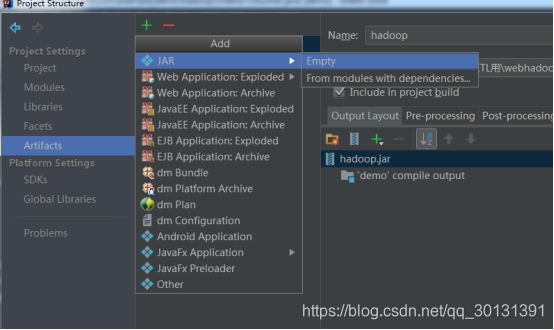

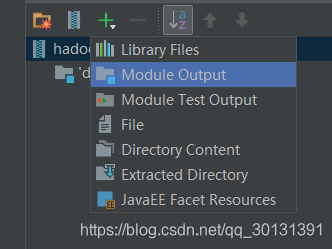

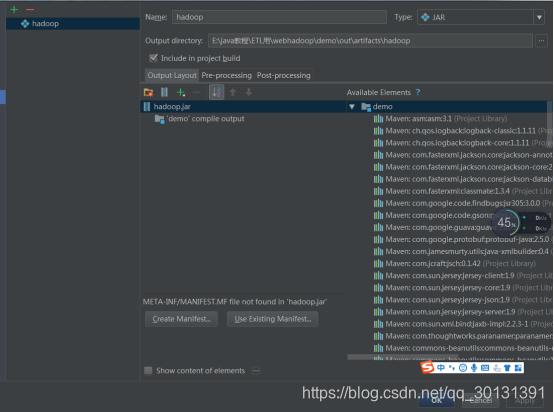

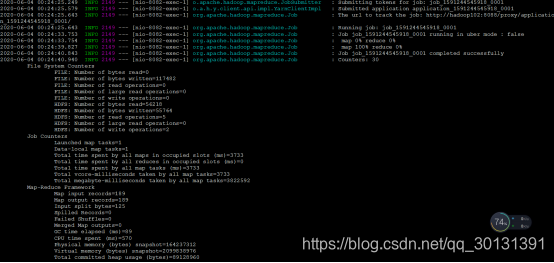

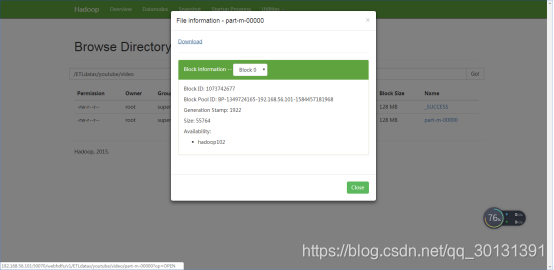

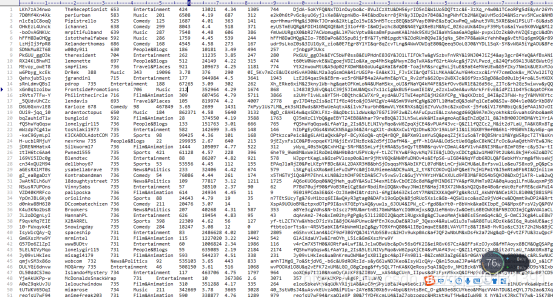

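When SpringBoot is integrated with Hadoop to submit jobs remotely, especially map and reduce jobs, the job can fail because the Mapper and Reducer classes cannot be found. The solution is described below.

As shown in the screenshots, open IDEA's Project Structure, click Artifacts, click the + sign, choose JAR, then Empty. Next, click the + sign under Output Layout and choose Module Output.

The goal is to clean the video list data stored on HDFS (the data comes from the YouTube project in the 尚硅谷 Hive hands-on course). Pull core-site.xml, hdfs-site.xml, mapred-site.xml and yarn-site.xml from the cluster and put them in the resources directory of the SpringBoot project.

Note that the code uses `job.setJar("/opt/mapredjars/hadoop.jar");` instead of `setJarByClass`, because `setJarByClass` fails with a Mapper-not-found error. The path passed to `setJar` is the location on the virtual machine of the jar that was taken from the artifact's Output directory and copied there.

When a local client submits a job, what it mainly uploads is job.xml, the file split information and job.jar, and job.jar chiefly contains the Mapper and Reducer programs. I had been using `job.setJarByClass`, and every job submitted from SpringBoot failed with an error saying ETLMapper could not be found, evidently because my custom Mapper could not be found in the submitted job.jar. (IDEA remote debugging also reports a not-found error, but that happens because the program has not been packaged into a jar yet, so it is a separate issue.) I tried pointing `setJar` at the jar produced by packaging the SpringBoot application, but that failed too. After comparing the structure of the jar produced by build with the jar produced by package, my guess is that the jar IDEA produces on build has the same structure as the development layout, while the jar produced by package does not, so the Mapper inside the packaged jar cannot be loaded. Switching to the jar produced by build did solve the problem. That does not fully prove the guess, though; the deeper reason why Hadoop cannot use the packaged jar still needs analysis.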
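The guess about the two jar layouts can be checked directly. Spring Boot's repackage goal (version 1.4 and later) stores application classes under `BOOT-INF/classes/` rather than at the jar root, which is a likely explanation for why the packaged jar does not work as job.jar, although the post itself does not confirm it. The sketch below uses only the JDK; the class name `JarLayoutCheck` and its methods are made up for illustration.

```java
import java.io.IOException;
import java.util.Enumeration;
import java.util.HashSet;
import java.util.Set;
import java.util.jar.JarEntry;
import java.util.jar.JarFile;

// Hypothetical helper, not part of the original project.
final class JarLayoutCheck {

    /** Turns a fully qualified class name into its entry path inside a jar. */
    static String entryPath(String className) {
        return className.replace('.', '/') + ".class";
    }

    /** Reports where the class sits among the given jar entry names. */
    static String classify(Set<String> entryNames, String className) {
        String plain = entryPath(className);
        if (entryNames.contains(plain)) {
            return "root";                 // layout a plain class loader can use
        }
        if (entryNames.contains("BOOT-INF/classes/" + plain)) {
            return "BOOT-INF/classes";     // Spring Boot repackaged layout
        }
        return "missing";
    }

    /** Reads all entry names from a jar on disk and classifies the class. */
    static String locate(String jarPath, String className) throws IOException {
        Set<String> names = new HashSet<>();
        try (JarFile jar = new JarFile(jarPath)) {
            Enumeration<JarEntry> entries = jar.entries();
            while (entries.hasMoreElements()) {
                names.add(entries.nextElement().getName());
            }
        }
        return classify(names, className);
    }

    public static void main(String[] args) throws IOException {
        if (args.length < 1) {
            System.out.println("usage: java JarLayoutCheck <path-to-jar>");
            return;
        }
        System.out.println(locate(args[0], "com.example.demo.hadoop.ETLMapper"));
    }
}
```

Running it against both jars should show whether ETLMapper sits at the root in the build artifact and somewhere else in the packaged jar.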

Pitfalls of remotely submitting MapReduce jobs from SpringBoot to a Hadoop cluster

Introduction

For IDEA remote debugging, see: https://blog.csdn.net/qq_19648191/article/details/56684268

IDEA Project Structure configuration

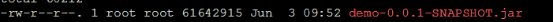

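One key point: after building the project, locate the jar in the artifact's Output directory. This jar has to be copied to the Linux virtual machine where the application runs (running on Windows also works; I ran it on a Linux VM). I created a mapredjars directory and put the jar in /opt/mapredjars.

SpringBoot code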

pom.xml:

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <parent>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-parent</artifactId>
            <version>1.5.9.RELEASE</version>
            <relativePath/> <!-- lookup parent from repository -->
        </parent>
        <groupId>com.example</groupId>
        <artifactId>demo</artifactId>
        <version>0.0.1-SNAPSHOT</version>
        <name>demo</name>
        <description>Demo project for Spring Boot</description>

        <properties>
            <java.version>1.8</java.version>
        </properties>

        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-web</artifactId>
                <version>1.5.9.RELEASE</version>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-devtools</artifactId>
                <scope>runtime</scope>
                <optional>true</optional>
            </dependency>
            <dependency>
                <groupId>org.projectlombok</groupId>
                <artifactId>lombok</artifactId>
                <optional>true</optional>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-test</artifactId>
                <scope>test</scope>
                <exclusions>
                    <exclusion>
                        <groupId>org.junit.vintage</groupId>
                        <artifactId>junit-vintage-engine</artifactId>
                    </exclusion>
                </exclusions>
            </dependency>
            <dependency>
                <groupId>junit</groupId>
                <artifactId>junit</artifactId>
                <version>RELEASE</version>
            </dependency>
            <dependency>
                <groupId>org.apache.logging.log4j</groupId>
                <artifactId>log4j-core</artifactId>
                <version>2.8.2</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-common</artifactId>
                <version>2.7.2</version>
                <exclusions>
                    <exclusion>
                        <groupId>org.slf4j</groupId>
                        <artifactId>slf4j-log4j12</artifactId>
                    </exclusion>
                    <exclusion>
                        <groupId>log4j</groupId>
                        <artifactId>log4j</artifactId>
                    </exclusion>
                    <exclusion>
                        <groupId>javax.servlet</groupId>
                        <artifactId>servlet-api</artifactId>
                    </exclusion>
                </exclusions>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-client</artifactId>
                <version>2.7.2</version>
                <exclusions>
                    <exclusion>
                        <groupId>org.slf4j</groupId>
                        <artifactId>slf4j-log4j12</artifactId>
                    </exclusion>
                    <exclusion>
                        <groupId>log4j</groupId>
                        <artifactId>log4j</artifactId>
                    </exclusion>
                    <exclusion>
                        <groupId>javax.servlet</groupId>
                        <artifactId>servlet-api</artifactId>
                    </exclusion>
                </exclusions>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-hdfs</artifactId>
                <version>2.7.2</version>
            </dependency>
        </dependencies>

        <build>
            <plugins>
                <plugin>
                    <artifactId>maven-compiler-plugin</artifactId>
                    <version>2.3.2</version>
                    <configuration>
                        <source>1.8</source>
                        <target>1.8</target>
                    </configuration>
                </plugin>
                <plugin>
                    <groupId>org.springframework.boot</groupId>
                    <artifactId>spring-boot-maven-plugin</artifactId>
                    <configuration>
                        <fork>true</fork>
                        <mainClass>com.example.demo.DemoApplication</mainClass>
                    </configuration>
                    <executions>
                        <execution>
                            <goals>
                                <goal>repackage</goal>
                            </goals>
                        </execution>
                    </executions>
                </plugin>
            </plugins>
        </build>
    </project>

application.properties:

    server.port=8082

ETLMapper.java:

    package com.example.demo.hadoop;

    import org.apache.commons.lang.StringUtils;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    public class ETLMapper extends Mapper<LongWritable, Text, NullWritable, Text> {

        // Reused output value, so no new Text is allocated per record
        Text text = new Text();

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Clean one input line; blank results are dropped
            String etlString = ETLUtil.getETLString(value.toString());
            if (StringUtils.isBlank(etlString)) return;
            text.set(etlString);
            context.write(NullWritable.get(), text);
        }
    }
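The mapper calls `ETLUtil.getETLString`, which is not shown in this post. The sketch below is only a guess at what such a helper might do, based on cleaning rules often used with this YouTube dataset: drop lines with fewer than 9 tab-separated fields, remove spaces from the category field, and join the related video IDs with `&`. The field positions and rules are assumptions, not the author's code, and in the real project the class would live in the `com.example.demo.hadoop` package.

```java
// Hypothetical stand-in for the ETLUtil class referenced by ETLMapper.
// The cleaning rules are assumed, not taken from the original post.
final class ETLUtil {

    /**
     * Cleans one tab-separated record.
     * Returns null for records with fewer than 9 fields, which the
     * mapper's StringUtils.isBlank check then skips.
     */
    static String getETLString(String line) {
        if (line == null) {
            return null;
        }
        String[] fields = line.split("\t");
        if (fields.length < 9) {
            return null;
        }
        // Assumed: field 3 is the category, e.g. "People & Blogs"
        fields[3] = fields[3].replace(" ", "");

        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < fields.length; i++) {
            if (i > 0) {
                // Tabs up to and including the first related ID (index 9),
                // '&' between the remaining related IDs
                sb.append(i <= 9 ? '\t' : '&');
            }
            sb.append(fields[i]);
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        String raw = "id\tuploader\t100\tPeople & Blogs\t10\t1\t4.5\t2\t3\tr1\tr2";
        System.out.println(getETLString(raw));
    }
}
```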

VideoETLRunner.java:

    package com.example.demo.hadoop;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.Tool;

    import java.io.IOException;

    public class VideoETLRunner implements Tool {

        private Configuration conf = null;

        public int run(String[] strings) throws Exception {
            conf = this.getConf();
            conf.set("inpath", "hdfs://192.168.56.101:9000/datas/datas/video/2008/0222");
            conf.set("outpath", "hdfs://192.168.56.101:9000/ETLdatas/youtube/video");
            conf.set("mapreduce.app-submission.cross-platform", "true");
            conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
            //conf.set("mapreduce.job.jar", "E:\java教程\ETL用\webhadoop\demo\out\artifacts\hadoop\hadoop.jar");

            Job job = Job.getInstance(conf, "youtube-video-etl");
            // Point at the jar built from the IDEA artifact; setJarByClass fails here
            job.setJar("/opt/mapredjars/hadoop.jar");
            job.setMapperClass(ETLMapper.class);
            job.setMapOutputKeyClass(NullWritable.class);
            job.setMapOutputValueClass(Text.class);
            // Map-only job: no reducers
            job.setNumReduceTasks(0);

            this.initJobInputPath(job);
            this.initJobOutputPath(job);

            return job.waitForCompletion(true) ? 0 : 1;
        }

        public void setConf(Configuration conf) {
            this.conf = conf;
        }

        public Configuration getConf() {
            return this.conf;
        }

        // Adds the input path to the job, failing if it does not exist on HDFS
        private void initJobInputPath(Job job) throws IOException {
            Configuration conf = job.getConfiguration();
            String inPathString = conf.get("inpath");
            FileSystem fs = FileSystem.get(conf);
            Path inPath = new Path(inPathString);
            if (fs.exists(inPath)) {
                FileInputFormat.addInputPath(job, inPath);
            } else {
                throw new RuntimeException("The directory does not exist on HDFS: " + inPathString);
            }
        }

        // Sets the output path, deleting it first if it already exists
        private void initJobOutputPath(Job job) throws IOException {
            Configuration conf = job.getConfiguration();
            String outPathString = conf.get("outpath");
            FileSystem fs = FileSystem.get(conf);
            Path outPath = new Path(outPathString);
            if (fs.exists(outPath)) {
                fs.delete(outPath, true);
            }
            FileOutputFormat.setOutputPath(job, outPath);
        }
    }
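The runner hard-codes the jar path passed to `job.setJar`. Because that path must exist on the machine where the SpringBoot application runs, it may help to resolve and check it before submitting the job. The following is a minimal sketch using only the JDK; the class `JobJarLocator` and the system property name `etl.job.jar` are hypothetical, and only the default path comes from the post.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper, not part of the original project.
final class JobJarLocator {

    // Default location used in the post
    static final String DEFAULT_JAR = "/opt/mapredjars/hadoop.jar";

    /** Falls back to the default path when nothing is configured. */
    static String choosePath(String configured) {
        if (configured == null || configured.trim().isEmpty()) {
            return DEFAULT_JAR;
        }
        return configured.trim();
    }

    /** Returns the jar path, or throws if the file is absent or unreadable. */
    static Path requireReadableJar(String configured) {
        Path jar = Paths.get(choosePath(configured));
        if (!Files.isRegularFile(jar) || !Files.isReadable(jar)) {
            throw new IllegalStateException("Job jar not found or not readable: " + jar);
        }
        return jar;
    }

    public static void main(String[] args) {
        // "etl.job.jar" is an assumed property name for this sketch
        String configured = System.getProperty("etl.job.jar");
        try {
            System.out.println("Using job jar: " + requireReadableJar(configured));
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

In the runner, the result could then be passed on as `job.setJar(JobJarLocator.requireReadableJar(null).toString())`, so a missing jar is reported before anything is sent to the cluster.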

Test

ETLController.java:

    package com.example.demo.hadoop;

    import org.apache.hadoop.util.ToolRunner;
    import org.springframework.stereotype.Controller;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.ResponseBody;

    @Controller
    public class ETLController {

        // Requesting /test submits the ETL job and waits for it to finish
        @ResponseBody
        @RequestMapping("/test")
        public String Test() {
            try {
                int resultCode = ToolRunner.run(new VideoETLRunner(), null);
                if (resultCode == 0) {
                    return "Success!";
                } else {
                    return "Fail!";
                }
            } catch (Exception e) {
                e.printStackTrace();
                // Note: this terminates the whole application, not just the request
                System.exit(1);
            }
            return "Fail!";
        }
    }
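The controller calls `System.exit(1)` when the job throws, which stops the whole JVM and takes the web application down with it. One possible alternative is to turn the exception into a failure response. The sketch below shows the idea with plain JDK types; `JobResponse` is a hypothetical helper, and the `Callable` stands in for the `ToolRunner.run` call.

```java
import java.util.concurrent.Callable;

// Hypothetical helper, not part of the original project.
final class JobResponse {

    /** Runs the job and maps its exit code or exception to a response string. */
    static String describe(Callable<Integer> job) {
        try {
            Integer code = job.call();
            return (code != null && code == 0) ? "Success!" : "Fail!";
        } catch (Exception e) {
            // Report the failure instead of shutting down the application
            return "Fail! " + e.getClass().getSimpleName() + ": " + e.getMessage();
        }
    }

    public static void main(String[] args) {
        System.out.println(describe(() -> 0));
        System.out.println(describe(() -> {
            throw new IllegalStateException("cluster unreachable");
        }));
    }
}
```

Inside the controller this could be used as `return JobResponse.describe(() -> ToolRunner.run(new VideoETLRunner(), null));`, assuming the rest of the class stays as it is.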

After the MR computation finishes, the browser returns the success message.

Download the output file to check it.

Success!
