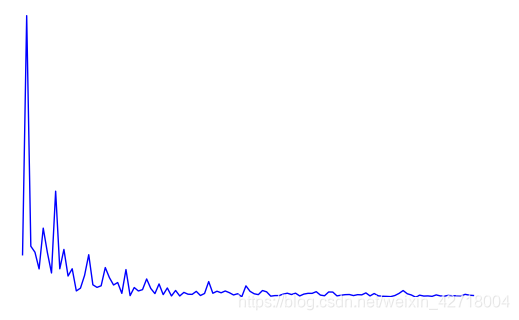

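Today's program is a music visualizer. What is music visualization? It looks like the image shown here.

The program has four parts. First comes the audio loading; note that you need to convert your music to WAV format, because MP3 did not work when I tried it. Next the view is initialized, then an update function for the view is defined, and finally everything is run.

You can look at the original blog post for comparison. The original program should display both the time domain and the frequency domain; I removed the time domain and added a small color-change effect. There is also a second visualization style, and the two styles look like the images in this post.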

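The nice thing about Python is how many libraries it has. With that many libraries, whatever you need, there is already a wheel you can use.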

Yes, that's the thing. There seems to be a proper name for it, but I can't think of it, so let's just call it music visualization.

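The basic idea is this: what the display shows should be the distribution of different frequencies in the music. So how do you get the frequency distribution of a song? The Fourier transform comes to mind: it takes you from the time domain to the frequency domain, and then the matplotlib library can update the frequency-domain data on screen in real time.

That's the idea, but how on earth do you implement it? Fourier transforms, time domain, frequency domain, it all sounds like a lot of trouble. Luckily, Python has plenty of libraries for this. The quickest way to get to know a library is to find someone else's implementation and reuse what already works. (This implementation follows the approach in the blog post mentioned above, which implements many visualization styles; I took two of them.)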
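To make the time-domain to frequency-domain step concrete, here is a minimal sketch, separate from the scripts in this post. It builds a synthetic 1000 Hz tone (an assumption, standing in for real music), takes one 20 ms window, and finds the strongest frequency. It uses `np.fft.rfft` rather than the `np.fft.fft` call in the scripts, since the input is real-valued; the helper name `dominant_frequency` is made up for illustration.

```python
import numpy as np


def dominant_frequency(samples, sample_rate):
    """Return the frequency in Hz of the strongest bin in a real-valued window."""
    spectrum = np.abs(np.fft.rfft(samples))          # magnitude per frequency bin
    freqs = np.fft.rfftfreq(len(samples), d=1.0 / sample_rate)  # bin centers in Hz
    return freqs[np.argmax(spectrum)]


fs = 44100                       # assumed sample rate
window = int(0.02 * fs)          # 20 ms window, same length the scripts use
t = np.arange(window) / fs       # time stamps of the samples
tone = np.sin(2 * np.pi * 1000 * t)  # synthetic 1000 Hz test tone

peak = dominant_frequency(tone, fs)
print(peak)
```

With a 20 ms window the bins are 50 Hz apart, so the visualization can only resolve frequencies at that spacing; a longer window gives finer frequency detail at the cost of slower updates.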

Import the libraries; the rest of the script follows:

```python
import numpy as np
import pyaudio
from pydub import AudioSegment
import matplotlib.pyplot as plt
from matplotlib.animation import FuncAnimation

# Load the audio (WAV) and keep only the left channel.
p = pyaudio.PyAudio()
sound = AudioSegment.from_file(file='Free-Converter.com-a1-86040504.wav')
left = sound.split_to_mono()[0]
fs = left.frame_rate
size = len(left.get_array_of_samples())
channels = left.channels

# Open an output stream so the audio plays while the plot updates.
stream = p.open(
    format=p.get_format_from_width(left.sample_width),
    channels=channels,
    rate=fs,
    # input=True,
    output=True,
)
stream.start_stream()

# Initialize the view.
fig = plt.figure()
ax1 = fig.subplots()
ax1.set_ylim(0, 2)
ax1.set_axis_off()

window = int(0.02 * fs)  # 20 ms of samples
g_windows = window // 8  # number of FFT bins drawn
# Nominal x positions only; the axis is hidden.
f = np.linspace(20, 20 * 1000, g_windows)
t = np.linspace(0, 20, window)  # only needed by the removed time-domain plot
lf1, = ax1.plot(f, np.zeros(g_windows), lw=1)
lf1.set_antialiased(True)
color_grade = ['black', 'blue', 'yellow', 'red']


def update(frames):
    """Play one window of audio and redraw its spectrum."""
    if stream.is_active():
        segment = left.get_sample_slice(frames, frames + window)
        stream.write(segment.raw_data)
        y = np.array(segment.get_array_of_samples()) / 30000  # normalize
        # n=window zero-pads the final, shorter window so the line keeps its length.
        yft = np.abs(np.fft.fft(y, n=window)) / g_windows
        spectrum = yft[:g_windows]
        # Choose the color from the spread between the strongest and weakest bin.
        grade = int(spectrum.max() - spectrum.min())
        if 0 <= grade < len(color_grade):
            lf1.set_color(color_grade[grade])
        lf1.set_ydata(spectrum)
        # lf2.set_ydata(y)
    return lf1,
    # return lf1, lf2,


# Run the animation, one frame per 20 ms window.
ani = FuncAnimation(fig, update, frames=range(0, size, window), interval=0, blit=True)
plt.show()
```
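One detail of the script above: the x positions passed to `plot` run from 20 to 20000, but with the axis hidden they are only nominal. As a rough sketch, the frequencies of the bins that are actually drawn can be worked out from the sample rate and window length. The function name `displayed_bin_frequencies` and the 44100 Hz rate are assumptions for illustration; the 20 ms window and the divide-by-8 come from the script.

```python
import numpy as np


def displayed_bin_frequencies(sample_rate, window_seconds=0.02, fraction=8):
    """Frequencies (Hz) of the FFT bins the line plot draws.

    Bin k of an FFT over `window` samples sits at k * sample_rate / window.
    The script keeps only the first window // 8 bins.
    """
    window = int(window_seconds * sample_rate)
    shown = window // fraction
    return np.arange(shown) * sample_rate / window


freqs = displayed_bin_frequencies(44100)
print(len(freqs), freqs[1], freqs[-1])
```

For a 44.1 kHz file this gives 110 bins spaced 50 Hz apart, so the drawn line covers roughly the range up to 5.5 kHz rather than the full audible band.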

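One more thing: you also need to download ffmpeg. After extracting it, add its `bin` directory to the system PATH environment variable.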
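A quick way to confirm the PATH change took effect is to ask Python whether it can find the executable. The sketch below also shows one possible way to convert a file to WAV with pydub's `AudioSegment.from_file(...).export(...)`. The helper names `ffmpeg_on_path` and `convert_to_wav` are made up for illustration, and I have not verified that this conversion avoids the MP3 problem mentioned earlier.

```python
import shutil


def ffmpeg_on_path():
    """Return True if an `ffmpeg` executable can be found on the PATH."""
    return shutil.which("ffmpeg") is not None


def convert_to_wav(src_path, dst_path):
    """Convert an audio file to WAV with pydub (needs pydub and ffmpeg)."""
    if not ffmpeg_on_path():
        raise RuntimeError("ffmpeg not found on PATH; add its bin directory first")
    # Imported lazily so the PATH check works even without pydub installed.
    from pydub import AudioSegment
    AudioSegment.from_file(src_path).export(dst_path, format="wav")
    return dst_path


print("ffmpeg found:", ffmpeg_on_path())
```

If the check prints `False` right after editing the environment variable, opening a new terminal usually helps, since running shells keep the old PATH.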

Here is all of the source code. The first style (a single spectrum line, with the original time-domain lines left commented out):

```python
# coding=utf-8
import numpy as np
import pyaudio
from pydub import AudioSegment
import matplotlib.pyplot as plt
from matplotlib.animation import FuncAnimation

# ------------------------------------ two-line mode
p = pyaudio.PyAudio()
sound = AudioSegment.from_file(file='Free-Converter.com-a1-86040504.wav')
left = sound.split_to_mono()[0]
fs = left.frame_rate
size = len(left.get_array_of_samples())
channels = left.channels
stream = p.open(
    format=p.get_format_from_width(left.sample_width),
    channels=channels,
    rate=fs,
    # input=True,
    output=True,
)
stream.start_stream()

fig = plt.figure()
# ax1, ax2 = fig.subplots(2, 1)
ax1 = fig.subplots()
ax1.set_ylim(0, 2)
# ax2.set_ylim(-1.5, 1.5)
ax1.set_axis_off()
# ax2.set_axis_off()

window = int(0.02 * fs)  # 20 ms of samples
g_windows = window // 8  # number of FFT bins drawn
f = np.linspace(20, 20 * 1000, g_windows)  # nominal x positions; axis is hidden
t = np.linspace(0, 20, window)  # only needed by the time-domain plot
lf1, = ax1.plot(f, np.zeros(g_windows), lw=1)
lf1.set_antialiased(True)
# lf1.set_fillstyle('left')
# lf1.set_drawstyle('steps-pre')
# lf2, = ax2.plot(t, np.zeros(window), lw=1)
color_grade = ['black', 'blue', 'yellow', 'red']


def update(frames):
    """Play one window of audio and redraw its spectrum."""
    if stream.is_active():
        segment = left.get_sample_slice(frames, frames + window)
        stream.write(segment.raw_data)
        y = np.array(segment.get_array_of_samples()) / 30000  # normalize
        # n=window zero-pads the final, shorter window so the line keeps its length.
        yft = np.abs(np.fft.fft(y, n=window)) / g_windows
        spectrum = yft[:g_windows]
        grade = int(spectrum.max() - spectrum.min())
        if 0 <= grade < len(color_grade):
            lf1.set_color(color_grade[grade])
        lf1.set_ydata(spectrum)
        # lf2.set_ydata(y)
    return lf1,
    # return lf1, lf2,


ani = FuncAnimation(fig, update, frames=range(0, size, window), interval=0, blit=True)
plt.show()
```

The second style (a stem plot):

```python
import struct
import wave

import matplotlib.pyplot as plt
import numpy as np
import pyaudio
from matplotlib.animation import FuncAnimation
from scipy.signal import detrend
# from scipy.fftpack import fft
# import librosa

chunk = 600  # frames read per update
p = pyaudio.PyAudio()
# sound = AudioSegment.from_file(file='../Music/xxx.mp3')
# rdata = sound.get_array_of_samples()
wf = wave.open('Free-Converter.com-a1-86040504.wav')
stream = p.open(
    format=pyaudio.paInt16,  # was the literal 8, which is paInt16
    channels=wf.getnchannels(),
    rate=wf.getframerate(),
    # input=True,
    output=True,
    # frames_per_buffer=chunk
)

fig = plt.figure()
ax = fig.gca()
ax.set_ylim(0, 1)
ax.set_axis_off()
# use_line_collection=True was dropped: it is the default since matplotlib 3.3
# and the argument no longer exists in recent releases.
lf = ax.stem(np.linspace(20, 20000, chunk), np.zeros(chunk), basefmt=':')
# lf.markerline.set_color([0.8, 0.2, 0, 0.5])
lf.markerline.set_color([0.3, 0.4, 0.5, 0.6])
# lf.markerline.set_color('yellow')


def init():
    stream.start_stream()
    return lf


def update(frame):
    """Play one chunk of audio and move the stem markers to its spectrum."""
    if stream.is_active():
        data = wf.readframes(chunk)
        stream.write(data)
        # The unpack below assumes 4 bytes per frame (16-bit stereo).
        # Skip the short final read instead of raising struct.error.
        if len(data) < chunk * 4:
            return lf
        data_int = struct.unpack(str(chunk * 4) + 'B', data)
        y_detrend = detrend(data_int)
        yft = np.abs(np.fft.fft(y_detrend))
        y_vals = yft[:chunk] / (chunk * chunk)
        # Exaggerate the bins above the midpoint between min and max.
        ind = np.where(y_vals > (np.max(y_vals) + np.min(y_vals)) / 2)
        y_vals[ind[0]] *= 4
        lf.markerline.set_ydata(y_vals)
    return lf


ani = FuncAnimation(fig, update, frames=None, init_func=init, interval=0, blit=True)
plt.show()
```
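A note on the second script: it unpacks the raw bytes with the `'B'` format, so each 16-bit sample is treated as two separate unsigned bytes. That still produces a lively picture, but the values are not real sample amplitudes. If you want the actual samples, a possible alternative (my own sketch, not what the script does) is to decode the bytes as little-endian signed 16-bit integers. It assumes a 16-bit PCM WAV file, and the helper name `decode_pcm16` is made up.

```python
import struct

import numpy as np


def decode_pcm16(data, channels):
    """Decode little-endian 16-bit PCM bytes into an array of shape (frames, channels).

    `data` must contain whole samples (an even number of bytes), which is
    what wave.readframes returns for a 16-bit file.
    """
    samples = np.frombuffer(data, dtype="<i2")
    usable = len(samples) - len(samples) % channels  # drop any incomplete frame
    return samples[:usable].reshape(-1, channels)


# Two stereo frames packed by hand, standing in for wf.readframes(...).
raw = struct.pack("<4h", 1000, -1000, 2000, -2000)
frames = decode_pcm16(raw, channels=2)
left_channel = frames[:, 0]
print(left_channel)
```

The left-channel array could then go through `detrend` and `np.fft.fft` in place of `data_int`, though the scaling constants in the script would need retuning for the larger value range.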
