以下是RNG S8 8强赛失败后,官微发表道歉微博下一级评论 rng_comment.txt(数据太大只能上传到百度云): 1、在kafak中创建rng_comment主题,设置2个分区2个副本 /export/servers/kafka_2.11-1.0.0/bin/kafka-topics.sh –create –zookeeper hadoop01:2181 –replication-factor 2 –partitions 2 –topic rng_comment 1.2、数据预处理,把空行过滤掉 import org.apache.spark.{SparkConf, SparkContext} 1.3、请把给出的文件写入到kafka中,根据数据id进行分区,id为奇数的发送到一个分区中,偶数的发送到另一个分区 1.4、使用Spark Streaming对接kafka之后进行计算 数据库表: CREATE DATABASE rng_comment;

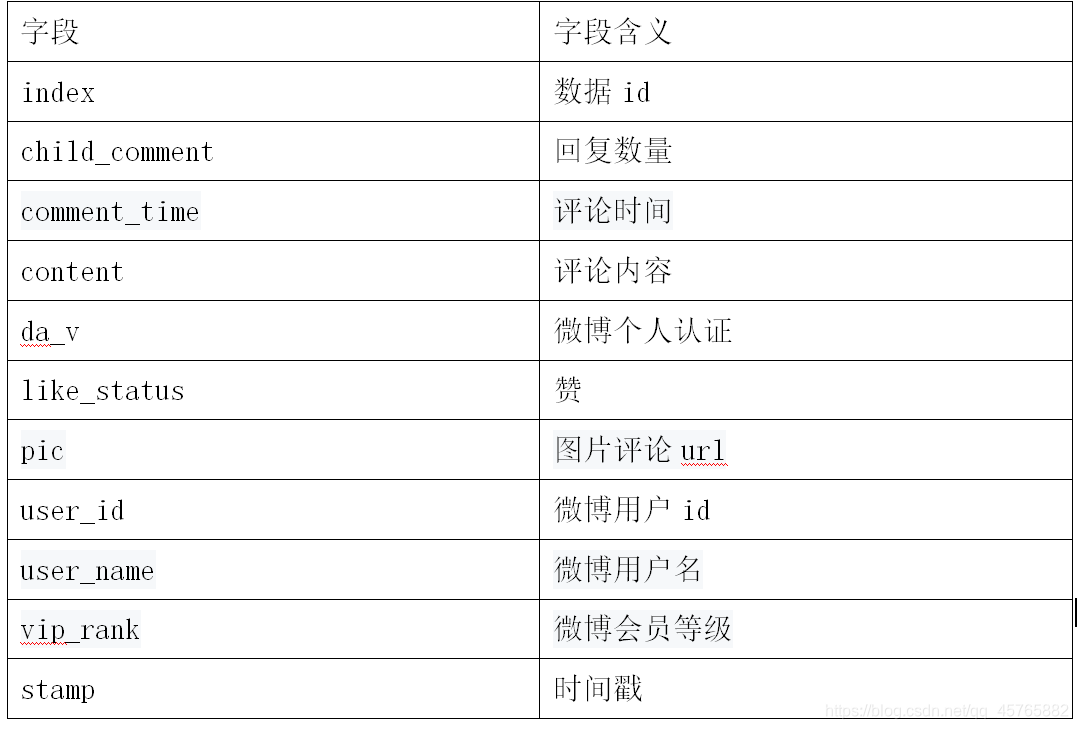

数据说明:

rng_comment.txt文件中的数据

链接: https://pan.baidu.com/s/164mBxiccbkLdFHF5BcSjww 提取码: vykt

import org.apache.spark.rdd.RDD

object day2 {

//1.2、数据预处理,把空行过滤掉

def main(args: Array[String]): Unit = {

val sparkConf: SparkConf = new SparkConf().setMaster(“local[*]”).setAppName(“day2”)

val sc = new SparkContext(sparkConf)

sc.setLogLevel(“WARN”)

val fileRDD: RDD[String] = sc.textFile(“F:第四学期的大数据资料day02四月份资料第二周day034.14号练习题rng_comment.txt”)

fileRDD.filter { x => var datas = x.split(“t”); datas.length == 11 }.coalesce(1)

.saveAsTextFile(“F:第四学期的大数据资料day02四月份资料第二周day034.14号练习题output20200414”)

sc.stop()

}

}import org.apache.kafka.clients.producer.KafkaProducer; import org.apache.kafka.clients.producer.ProducerRecord; import java.io.*; import java.util.Properties; public class Producer03 { public static void main(String[] args) throws IOException { //配置 kafka 集群 Properties props = new Properties(); props.put("bootstrap.servers", "hadoop01:9092,hadoop02:9092,hadoop03:9092"); props.put("acks", "all"); props.put("retries", 1); props.put("batch.size", 16384); props.put("linger.ms", 1); props.put("buffer.memory", 33554432); // kafka 数据中 key-value 的序列化 props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer"); props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer"); KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(props); File file = new File("F:\第四学期的大数据资料\day02四月份资料\第二周\day03\4.14号练习题\output20200414\part-00000"); BufferedReader bufferedReader = new BufferedReader(new FileReader(file)); String line = null; int partition = 0; while ((line = bufferedReader.readLine()) != null) { try { if (Integer.parseInt(line.split("t")[0]) % 2 == 0) { partition = 0; } else { partition = 1; } } catch (NumberFormatException e) { continue; } kafkaProducer.send(new ProducerRecord<String, String>("rng_comment", partition, String.valueOf(partition), line)); } bufferedReader.close(); kafkaProducer.close(); } }

在mysql中创建一个数据库rng_comment

在数据库rng_comment创建vip_rank表,字段为数据的所有字段

在数据库rng_comment创建like_status表,字段为数据的所有字段

在数据库rng_comment创建count_conmment表,字段为 时间,条数

1.5.1、查询出微博会员等级为5的用户,并把这些数据写入到mysql数据库中的vip_rank表中

1.5.2、查询出评论赞的个数在10个以上的数据,并写入到mysql数据库中的like_status表中

1.5.3、分别计算出2018/10/20 ,2018/10/21,2018/10/22,2018/10/23这四天每一天的评论数是多少,并写入到mysql数据库中的count_conmment表中import java.sql.{Connection, DriverManager, PreparedStatement} import java.text.SimpleDateFormat import org.apache.kafka.clients.consumer.ConsumerRecord import org.apache.kafka.common.serialization.StringDeserializer import org.apache.spark.{SparkConf, SparkContext} import org.apache.spark.rdd.RDD import org.apache.spark.streaming.{Seconds, StreamingContext} import org.apache.spark.streaming.dstream.{DStream, InputDStream} import org.apache.spark.streaming.kafka010.{ConsumerStrategies, KafkaUtils, LocationStrategies} object SparkStreaming { def main(args: Array[String]): Unit = { val sparkConf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("SparkStreaming") val ssc = new StreamingContext(sparkConf, Seconds(3)) // 3.设置Kafka参数 val kafkaParams = Map[String, Object]( "bootstrap.servers" -> "hadoop01:9092,hadoop02:9092,hadoop03:9092", "key.deserializer" -> classOf[StringDeserializer], "value.deserializer" -> classOf[StringDeserializer], "group.id" -> "SparkKafkaDemo", "auto.offset.reset" -> "latest", //false表示关闭自动提交.由spark帮你提交到Checkpoint或程序员手动维护 "enable.auto.commit" -> (false: java.lang.Boolean) ) // 4.设置Topic var topics = Array("rng_comment") val recordDStream: InputDStream[ConsumerRecord[String, String]] = KafkaUtils.createDirectStream[String, String](ssc, LocationStrategies.PreferConsistent, //位置策略,源码强烈推荐使用该策略,会让Spark的Executor和Kafka的Broker均匀对应 ConsumerStrategies.Subscribe[String, String](topics, kafkaParams)) //消费策略,源码强烈推荐使用该策略 val resultDStream: DStream[Array[String]] = recordDStream.map(_.value()).map(_.split("t")).cache() // 1.查询出微博会员等级为5的用户,并把这些数据写入到mysql数据库中的vip_rank表中 resultDStream.filter(_ (9) == "5") foreachRDD { rdd: RDD[Array[String]] => { rdd.foreachPartition { iter: Iterator[Array[String]] => { Class.forName( "com.mysql.jdbc.Driver") val connection: Connection = DriverManager.getConnection("jdbc:mysql://localhost:3306/rng_comment?characterEncoding=UTF-8", "root", "root") val sql = "insert into vip_rank values (?,?,?,?,?,?,?,?,?,?,?)" iter.foreach { line: Array[String] => { val statement: PreparedStatement = connection.prepareStatement(sql) statement.setInt(1, line(0).toInt); statement.setInt(2, line(1).toInt); statement.setString(3, line(2)); statement.setString(4, line(3)); statement.setString(5, line(4)); statement.setString(6, line(5)); statement.setString(7, line(6)); statement.setString(8, line(7)); statement.setString(9, line(8)); statement.setInt(10, line(9).toInt); statement.setString(11, line(10)); statement.executeUpdate() statement.close() } } connection.close() } } } } // 1.5.2、查询出评论赞的个数在10个以上的数据,并写入到mysql数据库中的like_status表中 resultDStream.filter(_ (5).toInt > 10).foreachRDD { rdd: RDD[Array[String]] => { rdd.foreachPartition { iter: Iterator[Array[String]] => { Class.forName("com.mysql.jdbc.Driver") val connection: Connection = DriverManager.getConnection( "jdbc:mysql://localhost:3306/rng_comment?characterEncoding=UTF-8", "root", "root") var sql = "insert into like_status values (?,?,?,?,?,?,?,?,?,?,?)" iter.foreach { line: Array[String] => { val statement: PreparedStatement = connection.prepareStatement(sql) statement.setInt(1, line(0).toInt); statement.setInt(2, line(1).toInt); statement.setString(3, line(2)); statement.setString(4, line(3)); statement.setString(5, line(4)); statement.setString(6, line(5)); statement.setString(7, line(6)); statement.setString(8, line(7)); statement.setString(9, line(8)); statement.setInt(10, line(9).toInt); statement.setString(11, line(10)); statement.executeUpdate() statement.close() } } connection.close() } } } } // 3.分别计算出2018/10/20 ,2018/10/21,2018/10/22,2018/10/23这四天每一天的评论数是多少,并写入到mysql数据库中的count_conmment表中 val dateFormat1 = new SimpleDateFormat("yyyy/MM/dd HH:mm") val dateFormat2 = new SimpleDateFormat("yyyy/MM/dd") val value: DStream[Array[String]] = resultDStream.filter { date:Array[String] => { val str: String = dateFormat2.format(dateFormat1.parse(date(2))) if ("2018/10/20".equals(str) || "2018/10/21".equals(str) || "2018/10/22".equals(str) || "2018/10/23".equals(str)) { true } else { false } } } value.foreachRDD { rdd: RDD[Array[String]] => { rdd.groupBy(x => dateFormat2.format(dateFormat1.parse(x(2)))).map(x => x._1 -> x._2.size).foreachPartition { iter: Iterator[(String, Int)] => { Class.forName("com.mysql.jdbc.Driver") val connection: Connection = DriverManager.getConnection("jdbc:mysql://localhost:3306/rng_comment?characterEncoding=UTF-8", "root", "root") val sql = "insert into count_conmment values (?,?)" iter.foreach { line: (String, Int) => { val statement: PreparedStatement = connection.prepareStatement(sql) statement.setString(1, line._1); statement.setInt(2, line._2.toInt); statement.executeUpdate() statement.close() } } connection.close() } } } } ssc.start() ssc.awaitTermination() } }

CREATE TABLE vip_rank (

index VARCHAR(32) NOT NULL,

child_comment VARCHAR(312) NOT NULL,

comment_time VARCHAR(322) NOT NULL,

content VARCHAR(332) NOT NULL,

da_v VARCHAR(342) NOT NULL,

like_status VARCHAR(532) NOT NULL,

pic VARCHAR(342) NOT NULL,

user_id VARCHAR(132) NOT NULL,

user_name VARCHAR(2362) NOT NULL,

vip_rank VARCHAR(372) NOT NULL,

stamp VARCHAR(332) NOT NULL,

PRIMARY KEY (index)

) ENGINE=INNODB DEFAULT CHARSET=utf8;

CREATE TABLE like_status (

index VARCHAR(32) NOT NULL,

child_comment VARCHAR(312) NOT NULL,

comment_time VARCHAR(322) NOT NULL,

content VARCHAR(332) NOT NULL,

da_v VARCHAR(342) NOT NULL,

like_status VARCHAR(532) NOT NULL,

pic VARCHAR(342) NOT NULL,

user_id VARCHAR(132) NOT NULL,

user_name VARCHAR(2362) NOT NULL,

vip_rank VARCHAR(372) NOT NULL,

stamp VARCHAR(332) NOT NULL,

PRIMARY KEY (index)

) ENGINE=INNODB DEFAULT CHARSET=utf8;

CREATE TABLE count_conmment (

comment_time VARCHAR(312) NOT NULL,

comment_time_count VARCHAR(322) NOT NULL

) ENGINE=INNODB DEFAULT CHARSET=utf8;

本网页所有视频内容由 imoviebox边看边下-网页视频下载, iurlBox网页地址收藏管理器 下载并得到。

ImovieBox网页视频下载器 下载地址: ImovieBox网页视频下载器-最新版本下载

本文章由: imapbox邮箱云存储,邮箱网盘,ImageBox 图片批量下载器,网页图片批量下载专家,网页图片批量下载器,获取到文章图片,imoviebox网页视频批量下载器,下载视频内容,为您提供.

阅读和此文章类似的: 全球云计算

官方软件产品操作指南 (170)

官方软件产品操作指南 (170)