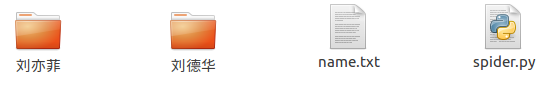

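Training deep learning models depends heavily on data. When you don't have enough, a web crawler can collect more from the Internet. The following example crawls images of Liu Yifei (刘亦菲) and Andy Lau (刘德华) to show how such a crawler works.

## Steps to use the code

1. Enter the keywords to crawl in `name.txt`, one per line. I entered 刘亦菲 and 刘德华. You don't need to create any folders, because the script creates them.
2. Run the code with `python3 spider.py` (it needs the `requests` and `beautifulsoup4` packages). The script asks how many images to download per keyword.
3. The script creates two new folders, `刘亦菲` and `刘德华`.
4. The folder contents look like this: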

(Screenshots: contents of the `刘亦菲` and `刘德华` folders.)
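The steps above reduce to a little bookkeeping: read one keyword per line from `name.txt`, create one folder per keyword, and request result pages at offsets 0, 60, 120 and so on until enough images are covered. Below is a minimal offline sketch of that bookkeeping, assuming the same URL pattern and 60-result page step the script uses. `read_keywords` and `page_urls` are hypothetical helpers, not part of the original script, and nothing here touches the network.

```python
import os
import tempfile
from urllib.parse import quote

# Same search URL pattern as the script; pn is the result offset
BASE = 'https://image.baidu.com/search/flip?tn=baiduimage&ie=utf-8&word='
PAGE_SIZE = 60  # offset step used by the script (assumed ~60 results per page)


def read_keywords(path):
    """Hypothetical helper: one keyword per line, blank lines skipped."""
    with open(path, encoding='utf-8') as f:
        return [line.strip() for line in f if line.strip()]


def page_urls(word, count):
    """Hypothetical helper: page URLs covering `count` images (pn = 0, 60, ...)."""
    # quote() percent-encodes the Chinese keyword so the URL is plain ASCII
    return [BASE + quote(word) + '&pn=' + str(pn)
            for pn in range(0, count, PAGE_SIZE)]


if __name__ == '__main__':
    # Work in a temporary directory so the demo leaves no files behind in cwd
    workdir = tempfile.mkdtemp()
    name_file = os.path.join(workdir, 'name.txt')
    with open(name_file, 'w', encoding='utf-8') as f:
        f.write('刘亦菲\n刘德华\n')
    for word in read_keywords(name_file):
        # One output folder per keyword, as the script does
        os.makedirs(os.path.join(workdir, word), exist_ok=True)
        print(word, len(page_urls(word, 100)))
```

With 100 images per keyword this produces two page URLs per keyword (`pn=0` and `pn=60`).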

```python
import os
import re
from urllib.parse import quote

import requests
from bs4 import BeautifulSoup

# Assumption: a browser-like User-Agent, since Baidu may return an
# anti-crawler page to clients that send none. Adjust if needed.
HEADERS = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64)'}
# Regex that pulls the original image URLs out of the result page
OBJ_URL_RE = re.compile(r'"objURL":"(.*?)",', re.S)
PAGE_SIZE = 60  # the pn offset advances by 60 results per page

num = 0          # images downloaded so far for the current keyword
numPicture = 0   # images to download per keyword
save_dir = ''    # output folder for the current keyword
List = []        # image URLs collected while counting


def Find(url):
    """Count the available images by paging through the first 1000 results."""
    print('Counting the total number of images, please wait...')
    t = 0
    s = 0
    while t < 1000:
        try:
            result = requests.get(url + str(t), headers=HEADERS, timeout=7).text
        except requests.RequestException:
            t += PAGE_SIZE
            continue
        pic_url = OBJ_URL_RE.findall(result)
        if not pic_url:
            break
        s += len(pic_url)
        List.append(pic_url)
        t += PAGE_SIZE
    return s


def recommend(url):
    """Return the "related searches" keywords listed on the result page."""
    try:
        html = requests.get(url, headers=HEADERS, timeout=7)
    except requests.RequestException:
        return []
    html.encoding = 'utf-8'
    bsObj = BeautifulSoup(html.text, 'html.parser')
    div = bsObj.find('div', id='topRS')
    if div is None:
        return []
    return [a.get_text() for a in div.find_all('a')]


def downloadPicture(html, keyword):
    """Download images from one result page until numPicture is reached."""
    global num
    pic_url = OBJ_URL_RE.findall(html)
    print('Found images for keyword ' + keyword + ', starting download...')
    for each in pic_url:
        if num >= numPicture:  # check first, so no extra image is fetched
            return
        print('Downloading image ' + str(num + 1) + ', URL: ' + each)
        try:
            pic = requests.get(each, headers=HEADERS, timeout=7)
            pic.raise_for_status()  # don't save HTTP error pages as .jpg
        except requests.RequestException:
            print('Error: this image could not be downloaded')
            continue
        with open(os.path.join(save_dir, str(num) + '.jpg'), 'wb') as fp:
            fp.write(pic.content)
        num += 1


if __name__ == '__main__':
    numPicture = int(input('Enter the number of images to download per keyword: '))
    with open('name.txt', encoding='utf-8') as f:
        line_list = [k.strip() for k in f if k.strip()]  # one keyword per line
    for word in line_list:
        url = ('https://image.baidu.com/search/flip?tn=baiduimage&ie=utf-8&word='
               + quote(word) + '&pn=')
        tot = Find(url)
        Recommend = recommend(url)  # related-search keywords (not used further)
        print('Detected %d images for keyword %s' % (tot, word))
        save_dir = word
        if os.path.exists(save_dir):
            print('Folder already exists; images will be saved into it')
        os.makedirs(save_dir, exist_ok=True)  # the original os.mkdir crashed here
        num = 0  # restart the counter (and file names) for each keyword
        t = 0
        while t < numPicture and num < numPicture:
            page_url = url + str(t)
            try:
                result = requests.get(page_url, headers=HEADERS, timeout=10)
                print(page_url)
            except requests.RequestException:
                print('Network error, please check your connection and retry')
            else:
                downloadPicture(result.text, word)
            t += PAGE_SIZE
    print('Done')
```
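The heart of the script is the regular expression `"objURL":"(.*?)",`, which grabs the original-image URL fields embedded in the flip page. The snippet below runs the same pattern on a made-up fragment. The sample string is hypothetical and only imitates the shape of those fields; it is not real Baidu output.

```python
import re

# Same pattern as the script: non-greedy capture of everything between
# "objURL":" and the next ",
OBJ_URL_RE = re.compile(r'"objURL":"(.*?)",', re.S)

# Hypothetical fragment imitating the JSON-like data in the result page
sample = ('{"thumbURL":"https://example.com/t1.jpg","objURL":"https://example.com/a.jpg",'
          '"fromURL":"x"},{"objURL":"https://example.com/b.png","width":500}')

urls = OBJ_URL_RE.findall(sample)
print(urls)  # ['https://example.com/a.jpg', 'https://example.com/b.png']
```

Because the pattern relies on a trailing `",`, a URL stored as the last field of an object (followed by `"}`) would not be captured cleanly, and the whole approach stops working if Baidu changes its page format.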
